Azure Data Lake Storage (Legacy) (deprecated)

The Azure Data Lake Storage (Legacy) destination writes data to Microsoft Azure Data Lake Storage Gen1.

You can use the Azure Data Lake Storage (Legacy) destination in standalone and cluster batch pipelines. The destination supports connecting to Azure Data Lake Storage Gen1 using Azure Active Directory service principal authentication. To use Azure Active Directory refresh-token authentication or to use the destination in a cluster streaming pipeline, use the Hadoop FS destination.

Before you use the destination, you must perform some prerequisite tasks.

When you configure the Azure Data Lake Storage (Legacy) destination, you specify connection information such as the Application ID and fully qualified domain name (FQDN) for the account.

You can define a directory template and time basis to determine the output directories that the destination creates and the files where records are written. You can also define a file prefix and suffix, the data time zone, and properties that define when the destination closes a file.

Alternatively, you can write records to the specified directory, use the defined Avro schema, and roll files based on record header attributes. For more information, see Record Header Attributes for Record-Based Writes.

The destination can also generate events for an event stream. For more information about the event framework, see Dataflow Triggers Overview.

Prerequisites

- In Active Directory, create a Data Collector web application.

- Retrieve information from Azure to configure the destination.

- Grant execute permission to the Data Collector web application.

If the steps below are no longer accurate, you might try the following article or check for updates to the Microsoft Azure Data Lake Storage Gen1 documentation.

After you complete all of the prerequisite tasks, you can configure the Azure Data Lake Storage (Legacy) destination.

Step 1. Create a Data Collector Web Application

To allow writing to Microsoft Azure Data Lake Storage Gen1, add a Data Collector web application to Azure Active Directory.

- Log in to the Azure portal: https://portal.azure.com.

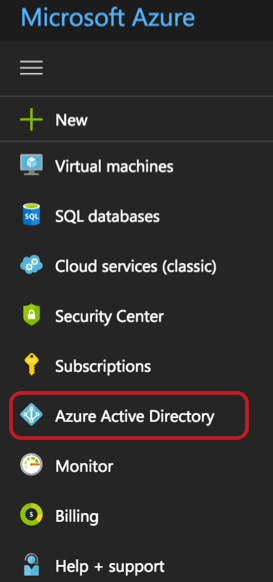

- In the Navigation panel, scroll down and click Azure Active

Directory.

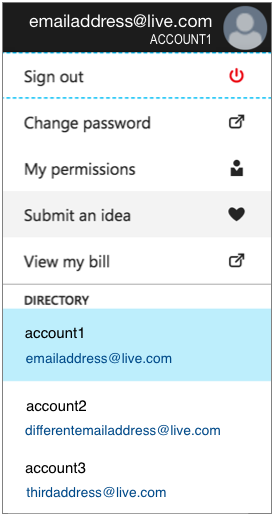

- If you have multiple accounts and need to select a different account, in the

upper right hand corner, click your user name, then select the account to use.

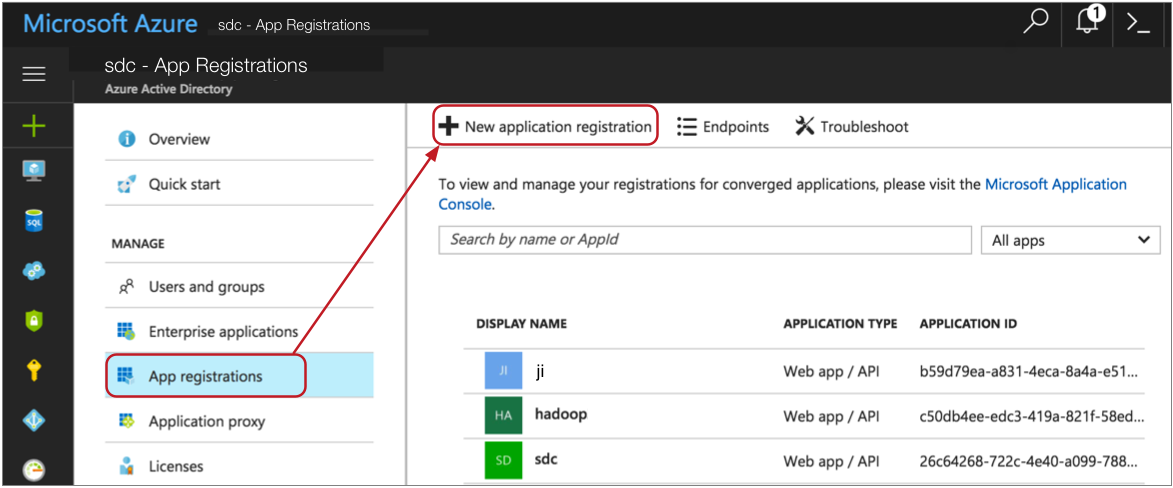

- To create an application, in the menu, select App

Registrations, then click New Application

Registration.

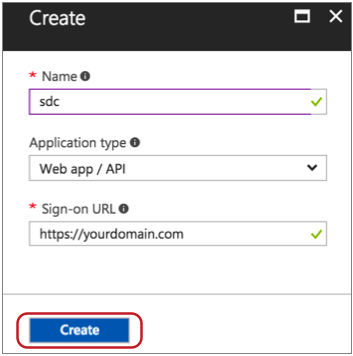

- On the Create page, enter the following information:

- Name: Enter an application name, such as "sdc".

- Application Type: Use the default, Web App / API.

- Sign-on URL: Enter a URL that describes the application. You can use any URL, such as "http://yourdomain.com".

- Click Create.

Active Directory creates the application and lists all available applications.

Step 2. Retrieve Information from Azure

- Auth Token Endpoint

- Application ID

- Application Key

- Account FQDN

- Retrieve the OAuth 2.0 Token Endpoint

-

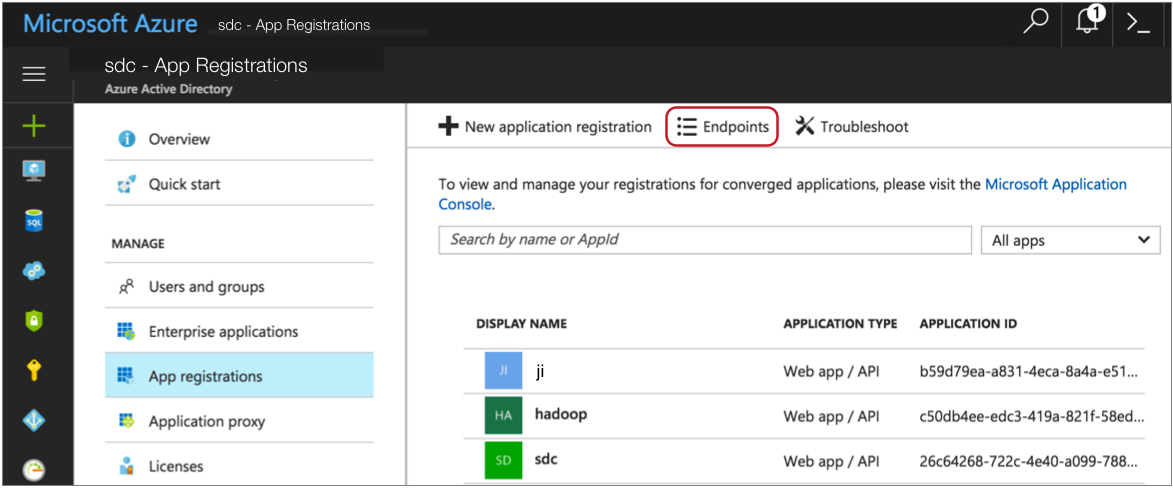

- If continuing directly from creating a new

application, above the list of applications on the App

Registrations page, select

Endpoints.

Otherwise, log in to the new Azure portal: https://portal.azure.com/. If you have more than one account, select the account to use. Click Azure Active Directory, then click App Registrations.

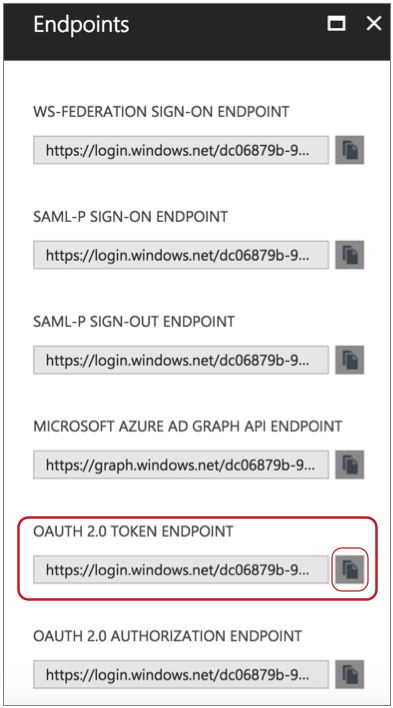

- In the Endpoints window, locate and copy the

OAuth 2.0 Token Endpoint URL.

When you configure the Azure Data Lake Storage (Legacy) destination, use this URL for the Auth Token Endpoint stage property.

- In the upper right corner of the Endpoints window, click the Close icon to close the window.

- If continuing directly from creating a new

application, above the list of applications on the App

Registrations page, select

Endpoints.

- Note the Application ID

-

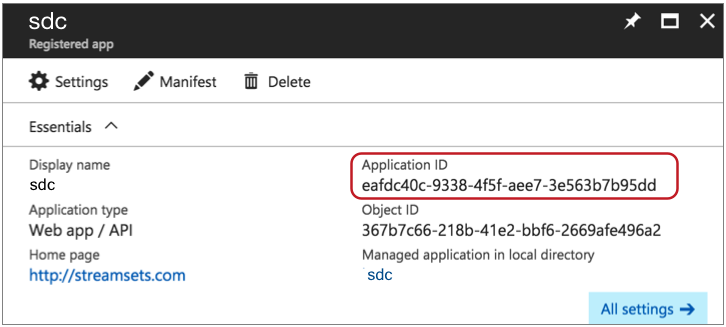

- On the App Registrations page,

click the new account that you created.

The Properties page appears.

- Copy the Application ID.

When you configure the Azure Data Lake Storage (Legacy) destination, use this value for the Application ID stage property.

- On the App Registrations page,

click the new account that you created.

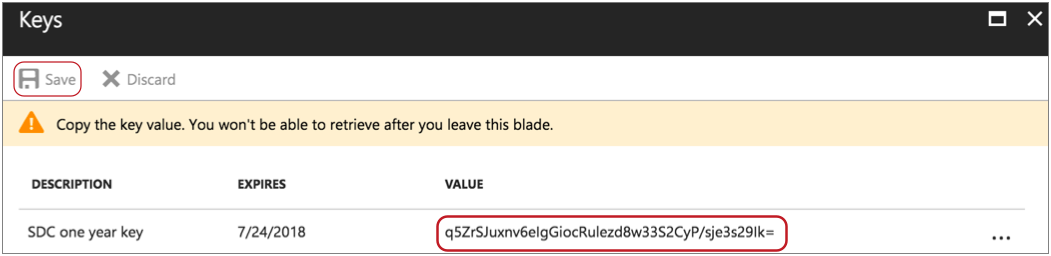

- Generate and copy the Application Key

-

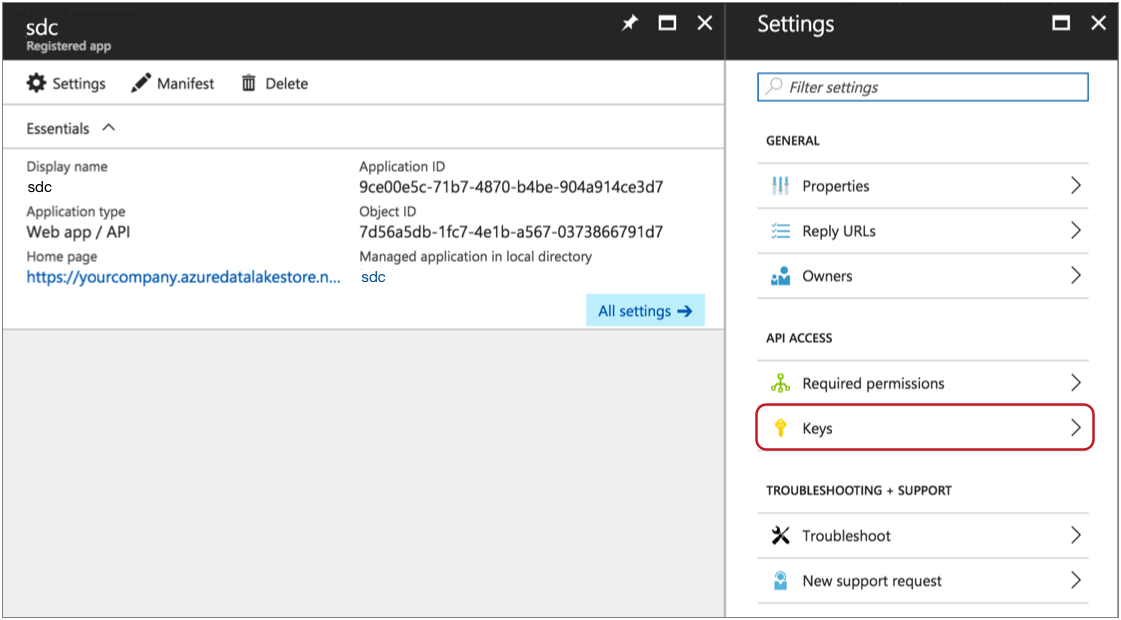

- With the new application selected, in the

Settings list, select

Keys.

- If you already have a key generated, copy the key.

Otherwise, to generate a key:

- Optionally enter a description for the key.

- Select a duration of time for the key to remain valid.

- Click Save to generate the key.

- Copy the generated key immediately.

When you configure the Azure Data Lake Storage (Legacy) destination, use this key for the Application Key stage property.

- With the new application selected, in the

Settings list, select

Keys.

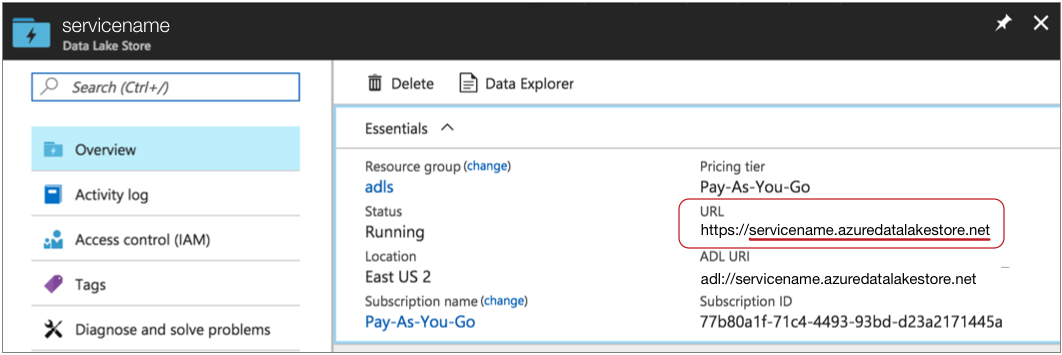

- Retrieve the Account FDQN

-

- In the Navigation panel, select the All

Resources icon:

- From the All Resources list, select the Data Lake Storage resource to use.

- In the Essentials page, note the

host name in the URL:

When you configure the Azure Data Lake Storage (Legacy) destination, use the host name from this URL for the Account FQDN stage property. In this case, the host name is

servicename.azuredatalakestore.net.

- In the Navigation panel, select the All

Resources icon:

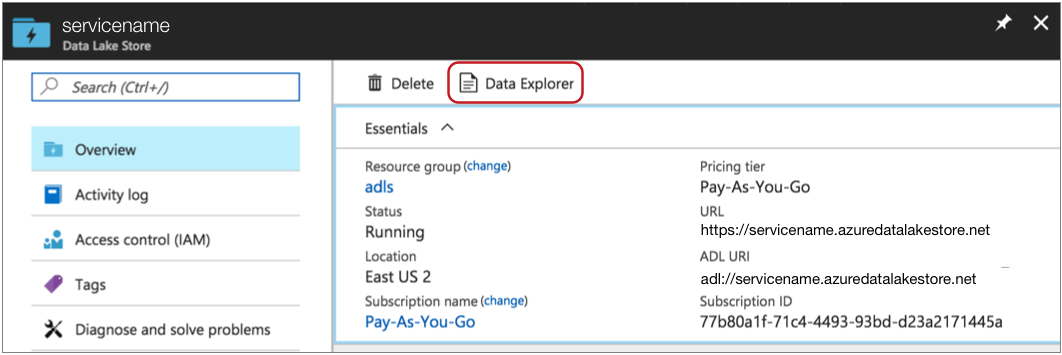

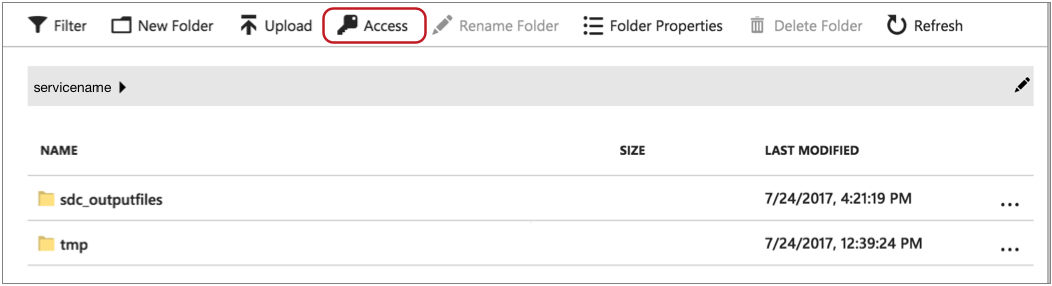

Step 3. Grant Execute Permission

To allow the Azure Data Lake Storage (Legacy) destination to write to Microsoft Azure Data Lake Storage Gen1, grant execute permission to the Data Collector web application for the folders that you want to use. When using directory templates in the destination, be sure to include all subfolders.

- If continuing directly from retrieving details from Azure, in the navigation

panel, click Data Explorer.

Otherwise, log in to the new Azure portal: https://portal.azure.com/. From the All Resources list, select the Data Lake Storage resource to use, then click Data Explorer.

- If necessary, click New Folder and create the folders that you want to use.

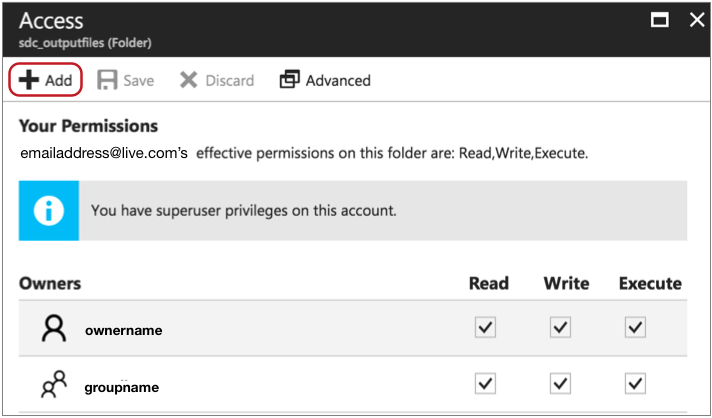

- To grant write access to a folder, select the folder, and then click

Access.

The Access panel displays any existing permissions.

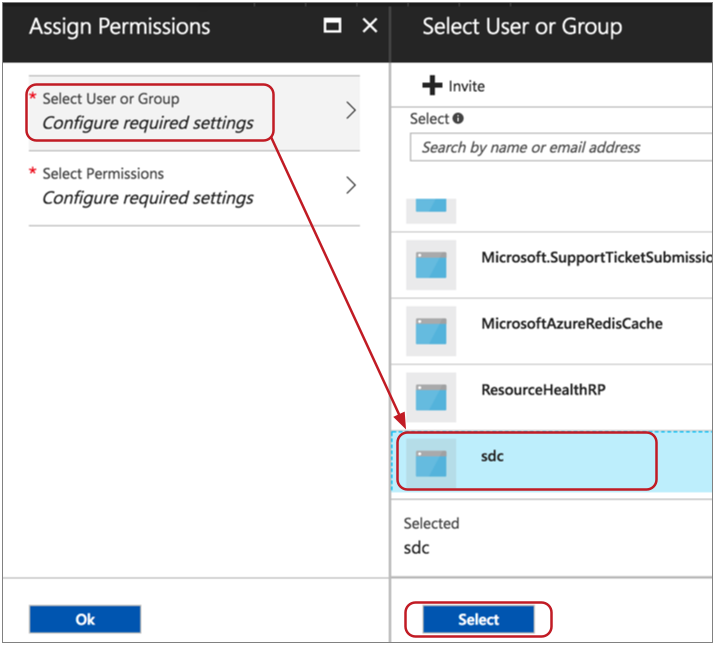

- To add the Data Collector web application as a user, in the Access panel, click the

Add icon.

- In the Assign Permissions panel, select Select User or Group.

- In the Select User or Group panel, scroll and select the

Data Collector web application that you created, and click

Select.

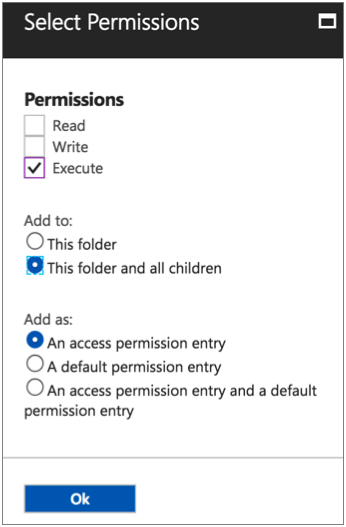

- In the Select Permissions panel, configure the following

properties:

- For Permissions, select Execute to allow Data Collector to write to the folder.

- For Add to, select This folder and all children.

- For Add as, you can use the default, An access permission entry.

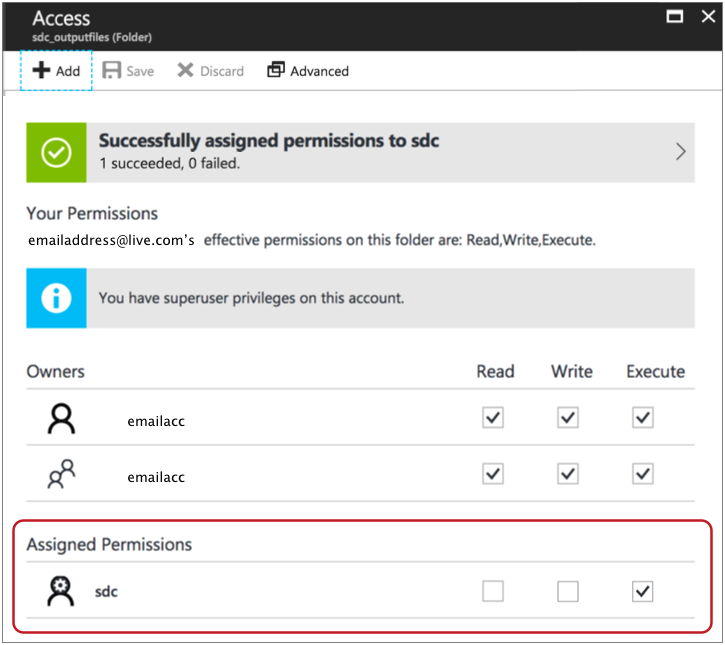

Click Ok to save your changes.

The Data Collector web application displays in the Assigned Permissions section of the Access panel.

Now that all prerequisite tasks are complete, you can configure the Azure Data Lake Storage (Legacy) destination.

Directory Templates

By default, the Azure Data Lake Storage (Legacy) destination uses directory templates to create output directories. The destination writes records to the directories based on the configured time basis.

You can alternatively write records to directories based

on the targetDirectory record header attribute. Using the

targetDirectory attribute disables the ability to define

directory templates.

When you define a directory template, you can use a mix

of constants, field values, and datetime variables. You can use the

every function to create new directories at regular

intervals based on hours, minutes, or seconds, starting on the hour. You can also

use the record:valueOrDefault function to create new directories

from field values or a default in the directory template.

/outputfiles/${record:valueOrDefault("/State", "unknown")}/${YY()}-${MM()}-${DD()}-${hh()}- Constants

- You can use any constant, such as

output. - Datetime Variables

- You can use datetime variables, such as

${YYYY()}or${DD()}. The destination creates directories as needed, based on the smallest datetime variable that you use. For example, if the smallest variable is hours, then the directories are created for every hour of the day that receives output records. everyfunction- You can use the

everyfunction in a directory template to create directories at regular intervals based on hours, minutes, or seconds, beginning on the hour. The intervals should be a submultiple or integer factor of 60. For example, you can create directories every 15 minutes or 30 seconds. record:valueOrDefaultfunction- You can use the

record:valueOrDefaultfunction in a directory template to create directories with the value of a field or the specified default value if the field does not exist or if the field is null:${record:valueOrDefault(<field path>, <default value>)}

Time Basis

When using directory templates, the time basis helps determine when directories are created. It also determines the directory that the destination uses when writing a record, and whether a record is late.

- Processing Time

- When you use processing time as the time basis, the destination creates directories based on the processing time and the directory template, and writes records to the directories based on when they are processed.

- Record Time

- When you use the time associated with a record as the time basis, you specify a Date field in the record. The destination creates directories based on the datetimes associated with the records and writes the records to the appropriate directories.

Timeout to Close Idle Files

You can configure the maximum time that an open output file can remain idle. After no records are written to an output file for the specified amount of time, the destination closes the file.

You might want to configure an idle timeout when output files remain open and idle for too long, thus delaying another system from processing the files.

- You configured the maximum number of records to be written to output files or the maximum size of output files, but records have stopped arriving. An output file that has not reached the maximum number of records or the maximum file size stays open until more records arrive.

- You configured a date field in the record as the time basis and have

configured a late record time limit, but records arrive in

chronological order. When a new directory is created, the output file

in the previous directory remains open for the configured late record

time limit. However, no records are ever written to the open file in

the previous directory.

For example, when a record with a datetime of 03:00 arrives, the destination creates a new file in a new 03 directory. The previous file in the 02 directory is kept open for the late record time limit, which is an hour by default. However, when records arrive in chronological order, no records that belong in the 02 directory arrive after the 03 directory is created.

In either situation, configure an idle timeout so that other systems can process the files sooner, instead of waiting for the configured maximum records, maximum file size, or late records conditions to occur.

Event Generation

The Azure Data Lake Storage (Legacy) destination can generate events that you can use in an event stream. When you enable event generation, Azure Data Lake Storage (Legacy) generates event records each time the destination completes writing to an output file or completes streaming a whole file.

- With the Email executor to send a custom email

after receiving an event.

For an example, see Sending Email During Pipeline Processing.

- With a destination to store event information.

For an example, see Preserving an Audit Trail of Events.

For more information about dataflow triggers and the event framework, see Dataflow Triggers Overview.

Event Records

Azure Data Lake Storage (Legacy) event records include the following event-related record header attributes. Record header attributes are stored as String values:

| Record Header Attribute | Description |

|---|---|

| sdc.event.type | Event type. Uses one of the following types:

|

| sdc.event.version | Integer that indicates the version of the event record type. |

| sdc.event.creation_timestamp | Epoch timestamp when the stage created the event. |

- File closure

- The destination generates a file closure event record when it closes an output file.

- Whole file processed

- The

destination generates an event record when it completes

streaming a whole file. Whole file event records have the

sdc.event.typerecord header attribute set towholeFileProcessedand have the following fields:Field Description sourceFileInfo A map of attributes about the original whole file that was processed. The attributes include: - size - Size of the whole file in bytes.

Additional attributes depend on the information provided by the origin system.

targetFileInfo A map of attributes about the whole file written to the destination. The attributes include: - path - An absolute path to the processed whole file.

checksum Checksum generated for the written file. Included only when you configure the destination to include checksums in the event record.

checksumAlgorithm Algorithm used to generate the checksum. Included only when you configure the destination to include checksums in the event record.

Data Formats

- Avro

- The destination writes records based on the Avro schema. You can use one of the following methods to specify the location of the Avro schema definition:

- Binary

- The stage writes binary data to a single field in the record.

- Delimited

- The destination writes records as delimited data. When you use this data format, the root field must be list or list-map.

- JSON

- The destination writes records as JSON data. You can use one of

the following formats:

- Array - Each file includes a single array. In the array, each element is a JSON representation of each record.

- Multiple objects - Each file includes multiple JSON objects. Each object is a JSON representation of a record.

- Protobuf

- Writes a batch of messages in each file.

- Text

- The destination writes data from a single text field to the destination system. When you configure the stage, you select the field to use.

- Whole File

- Streams whole files to the destination system. The destination writes the data to the file and location defined in the stage. If a file of the same name already exists, you can configure the destination to overwrite the existing file or send the current file to error.

Configuring an Azure Data Lake Storage (Legacy) Destination

Configure an Azure Data Lake Storage (Legacy) destination to write data to Microsoft Azure Data Lake Storage Gen1. Be sure to complete the necessary prerequisites before you configure the destination.

-

In the Properties panel, on the General tab, configure the

following properties:

General Property Description Name Stage name. Description Optional description. Stage Library Library version that you want to use. Produce Events Generates event records when events occur. Use for event handling. Required Fields Fields that must include data for the record to be passed into the stage. Tip: You might include fields that the stage uses.Records that do not include all required fields are processed based on the error handling configured for the pipeline.

Preconditions Conditions that must evaluate to TRUE to allow a record to enter the stage for processing. Click Add to create additional preconditions. Records that do not meet all preconditions are processed based on the error handling configured for the stage.

-

On the Data Lake tab, configure the following

properties:

Data Lake Property Description Application ID Azure Application ID. The Application ID for the Data Collector web application created in Active Directory.

For help locating this information in Azure, see Step 2. Retrieve Information from Azure.

Auth Token Endpoint Azure OAuth 2.0 token endpoint URL for the Data Collector web application. For help locating this information in Azure, see Step 2. Retrieve Information from Azure.

Account FQDN The host name of the Data Lake Storage account. For example: <service name>.azuredatalakestore.netFor help locating this information in Azure, see Step 2. Retrieve Information from Azure.

Application Key The Application Key of the Data Collector web application created in Active Directory. For help locating this information in Azure, see Step 2. Retrieve Information from Azure.

-

On the Output Files tab, configure the following

properties:

Output Files Property Description Files Suffix Suffix to use for output files, such as txtorjson. When used, the destination adds a period and the configured suffix as follows:<filename>.<suffix>.You can include periods within the suffix, but do not start the suffix with a period. Forward slashes are not allowed.

Not available for the whole file data format.

Directory Template Template for creating output directories. You can use constants, field values, and datetime variables. Output directories are created based on the smallest datetime variable in the template.

Files Prefix Prefix to use for output files. Use when writing to a directory that receives files from other sources. Uses the prefix

sdc-${sdc:id()}by default. The prefix evaluates tosdc-<Data Collector ID>.The Data Collector ID is stored in the following file: $SDC_DATA/sdc.id. For more information about environment variables, see Data Collector Environment Configuration in the Data Collector documentation.

Directory in Header Indicates that the target directory is defined in record headers. Use only when the targetDirectory header attribute is defined for all records. Data Time Zone Time zone for the destination system. Used to resolve datetimes in the directory template and evaluate where records are written. Time Basis Time basis to use for creating output directories and writing records to the directories. Use one of the following expressions: ${time:now()}- Uses the processing time as the time basis.${record:value(<date field path>)}- Uses the time associated with the record as the time basis.

Max Records in File Maximum number of records written to an output file. Additional records are written to a new file. Use 0 to opt out of this property.

Not available when using the whole file data format.

Max File Size (MB) Maximum size of an output file. Additional records are written to a new file. Use 0 to opt out of this property.

Not available when using the whole file data format.

Idle Timeout Maximum time that an output file can remain idle. After no records are written to a file for this amount of time, the destination closes the file. Enter a time in seconds or use the MINUTESorHOURSconstant in an expression to define the time increment.Use -1 to set no limit. Default is 1 hour, defined as follows:

${1 * HOURS}.Not available when using the whole file data format.

Use Roll Attribute Checks the record header for the roll header attribute and closes the current file when the roll attribute exists. Can be used with Max Records in a File and Max File Size to close files.

Roll Attribute Name Name of the roll header attribute. Default is roll.

Validate Directory Permissions When you start the pipeline, the destination tries writing to the configured directory template to validate permissions. The pipeline does not start if validation fails. Note: Do not use this option when the directory template uses expressions to represent the entire directory. -

On the Data Format tab, configure the following

property:

Data Format Property Description Data Format Format of data to be written. Use one of the following options: - Avro

- Binary

- Delimited

- JSON

- Protobuf

- Text

- Whole File

-

For Avro data, on the Data Format tab, configure the

following properties:

Avro Property Description Avro Schema Location Location of the Avro schema definition to use when writing data: - In Pipeline Configuration - Use the schema that you provide in the stage configuration.

- In Record Header - Use the schema in the avroSchema record header attribute. Use only when the avroSchema attribute is defined for all records.

- Confluent Schema Registry - Retrieve the schema from Confluent Schema Registry.

Avro Schema Avro schema definition used to write the data. You can optionally use the

runtime:loadResourcefunction to load a schema definition stored in a runtime resource file.Register Schema Registers a new Avro schema with Confluent Schema Registry. Schema Registry URLs Confluent Schema Registry URLs used to look up the schema or to register a new schema. To add a URL, click Add and then enter the URL in the following format: http://<host name>:<port number>Basic Auth User Info User information needed to connect to Confluent Schema Registry when using basic authentication. Enter the key and secret from the

schema.registry.basic.auth.user.infosetting in Schema Registry using the following format:<key>:<secret>Tip: To secure sensitive information such as user names and passwords, you can use runtime resources or credential stores. For more information about credential stores, see Credential Stores in the Data Collector documentation.Look Up Schema By Method used to look up the schema in Confluent Schema Registry: - Subject - Look up the specified Avro schema subject.

- Schema ID - Look up the specified Avro schema ID.

Schema Subject Avro schema subject to look up or to register in Confluent Schema Registry. If the specified subject to look up has multiple schema versions, the destination uses the latest schema version for that subject. To use an older version, find the corresponding schema ID, and then set the Look Up Schema By property to Schema ID.

Schema ID Avro schema ID to look up in Confluent Schema Registry. Include Schema Includes the schema in each file. Note: Omitting the schema definition can improve performance, but requires the appropriate schema management to avoid losing track of the schema associated with the data.Avro Compression Codec The Avro compression type to use. When using Avro compression, do not enable other compression available in the destination.

-

For binary data, on the Data Format tab, configure the

following property:

Binary Property Description Binary Field Path Field that contains the binary data. -

For delimited data, on the Data Format tab, configure the

following properties:

Delimited Property Description Delimiter Format Format for delimited data: - Default CSV - File that includes comma-separated values. Ignores empty lines in the file.

- RFC4180 CSV - Comma-separated file that strictly follows RFC4180 guidelines.

- MS Excel CSV - Microsoft Excel comma-separated file.

- MySQL CSV - MySQL comma-separated file.

- Tab-Separated Values - File that includes tab-separated values.

- PostgreSQL CSV - PostgreSQL comma-separated file.

- PostgreSQL Text - PostgreSQL text file.

- Custom - File that uses user-defined delimiter, escape, and quote characters.

Header Line Indicates whether to create a header line. Delimiter Character Delimiter character for a custom delimiter format. Select one of the available options or use Other to enter a custom character. You can enter a Unicode control character using the format \uNNNN, where N is a hexadecimal digit from the numbers 0-9 or the letters A-F. For example, enter \u0000 to use the null character as the delimiter or \u2028 to use a line separator as the delimiter.

Default is the pipe character ( | ).

Record Separator String Characters to use to separate records. Use any valid Java string literal. For example, when writing to Windows, you might use \r\n to separate records. Available when using a custom delimiter format.

Escape Character Escape character for a custom delimiter format. Select one of the available options or use Other to enter a custom character. Default is the backslash character ( \ ).

Quote Character Quote character for a custom delimiter format. Select one of the available options or use Other to enter a custom character. Default is the quotation mark character ( " ).

Replace New Line Characters Replaces new line characters with the configured string. Recommended when writing data as a single line of text.

New Line Character Replacement String to replace each new line character. For example, enter a space to replace each new line character with a space. Leave empty to remove the new line characters.

Charset Character set to use when writing data. -

For JSON data, on the Data Format tab, configure the

following properties:

JSON Property Description JSON Content Method to write JSON data: - JSON Array of Objects - Each file includes a single array. In the array, each element is a JSON representation of each record.

- Multiple JSON Objects - Each file includes multiple JSON objects. Each object is a JSON representation of a record.

Charset Character set to use when writing data. -

For protobuf data, on the Data Format tab, configure the

following properties:

Protobuf Property Description Protobuf Descriptor File Descriptor file (.desc) to use. The descriptor file must be in the Data Collector resources directory, $SDC_RESOURCES.For more information about environment variables, see Data Collector Environment Configuration in the Data Collector documentation. For information about generating the descriptor file, see Protobuf Data Format Prerequisites.

Message Type Fully-qualified name for the message type to use when writing data. Use the following format:

Use a message type defined in the descriptor file.<package name>.<message type>. -

For text data, on the Data Format tab, configure the

following properties:

Text Property Description Text Field Path Field that contains the text data to be written. All data must be incorporated into the specified field. Record Separator Characters to use to separate records. Use any valid Java string literal. For example, when writing to Windows, you might use \r\n to separate records. By default, the destination uses \n.

On Missing Field When a record does not include the text field, determines whether the destination reports the missing field as an error or ignores the missing field. Insert Record Separator if No Text When configured to ignore a missing text field, inserts the configured record separator string to create an empty line. When not selected, discards records without the text field.

Charset Character set to use when writing data. -

For whole files, on the Data Format tab, configure the

following properties:

Whole File Property Description File Name Expression Expression to use for the file names.

For tips on how to name files based on input file names, see Writing Whole Files.

Permissions Expression Expression that defines the access permissions for output files. Expressions should evaluate to a symbolic or numeric/octal representation of the permissions you want to use. By default, with no specified expression, files use the default permissions of the destination system.

To use the original source file access permissions, use the following expression:${record:value('/fileInfo/permissions')}File Exists Action to take when a file of the same name already exists in the output directory. Use one of the following options: - Send to Error - Handles the record based on stage error record handling.

- Overwrite - Overwrites the existing file.

Include Checksum in Events Includes checksum information in whole file event records. Use only when the destination generates event records.

Checksum Algorithm Algorithm to generate the checksum.