MySQL Binary Log

The MySQL Binary Log origin processes change data capture (CDC) information provided by MySQL server in binary logs. For information about supported versions, see Supported Systems and Versions.

The MySQL Binary Log origin acts as a MySQL replication replica. MySQL replication allows you to maintain multiple copies of MySQL data by copying the data from a source to a replica server. The origin uses the replication process to capture changes from the MySQL source database and then pass the changed data to a Data Collector pipeline.

The origin can process binary logs from a MySQL server that has Global Transaction Identifier (GTID) enabled or disabled. A GTID is a unique transaction ID assigned to every transaction that happens in the MySQL server database.

MySQL Binary Log processes only change capture data. The origin creates records for the INSERT, UPDATE, and DELETE operations. The origin produces one record for each change noted in the binary logs.

The generated record includes a map of fields of CDC information. It also includes the CRUD operation type in a record header attribute so generated records can be easily processed by CRUD-enabled destinations. For an overview of Data Collector changed data processing and a list of CRUD-enabled destinations, see Processing Changed Data.

When needed, you can use a JDBC Query Consumer or a JDBC Multitable Consumer in a separate pipeline to read existing data from the MySQL database before you start a pipeline with a MySQL Binary Log origin. When necessary, you should also configure the MySQL Binary Log pipeline to modify the generated records. Of the CRUD-enabled destinations, only the Kudu and JDBC Producer destinations include a Change Log Format property that enables processing MySQL Binary Log records as generated.

Before you configure the origin, you must complete several prerequisites.

When you configure MySQL Binary Log, you configure the database server from which to read the log files. You can configure the origin to read the binary log files from the beginning, or you can specify an offset to determine where the origin starts reading data.

You specify the credentials to use to connect to MySQL server. You can also configure advanced properties, such as Keep Alive thread properties, and tables that the origin includes or ignores during processing.

Prerequisites

Configure MySQL Server for Row-based Logging

The MySQL Binary Log origin can process binary logs from a MySQL server configured to use row-based logging.

Before you use the origin, verify that the binary log format for the MySQL server is set to ROW. For instructions, see Setting the Binary Log Format in the MySQL documentation.

Enable the Required Authentication

caching_sha2_password- The default authentication plugin for MySQL 8.0 and later.mysql_native_password- The default authentication plugin for earlier MySQL versions.

To use the MySQL Binary Log origin, the MySQL account specified in the origin must use one of these required authentication plugins.

When the MySQL server uses a different authentication plugin, you can configure a user account to use one of the required plugins.

caching_sha2_password plugin:

ALTER USER '<user>'@'<database>' IDENTIFIED WITH caching_sha2_password BY '<password>'Specify the user name, database name, and password to use. Then, use these details when you configure the origin.

For more information about the authentication plugins, see the MySQL documentation.

Set the Row Metadata Property

MySQL 8.0 and later versions provide a binlog_row_metadata property that

defines the level of table metadata to include in the binary log.

To allow the MySQL Binary Log origin to properly handle schema changes, such as a change

in column order, set the binlog_row_metadata property to

FULL.

Earlier MySQL versions do not include the binlog_row_metadata property.

As a result, when reading from MySQL 7.x or earlier, the MySQL Binary Log origin can

handle some types of schema changes, but not all.

Install the JDBC Driver

Before you use the MySQL Binary Log origin, install the MySQL JDBC driver. You cannot access the database until you install the required driver.

You install the driver into the MySQL Binary Log stage library, streamsets-datacollector-mysql-binlog-lib, which includes the origin.

To use the JDBC driver

with multiple stage libraries, install the driver into each stage library associated

with the stages.

For example, if you want to use a MySQL JDBC driver with the JDBC Lookup processor

and with the MySQL Binary Log origin, you install the driver as an external library

for the JDBC stage library, streamsets-datacollector-jdbc-lib, and for the MySQL Binary Log stage library, streamsets-datacollector-mysql-binlog-lib.

For information about installing additional drivers, see Install External Libraries.

Initial Offset

You can configure the origin to start reading the binary log file from the beginning of the file or from an initial offset in the file.

The initial offset is the point in the binary log file where you want to start processing. When you start the pipeline, the MySQL Binary Log origin starts processing from the initial offset and continues until you stop the pipeline.

The format that you use to configure the initial offset depends on whether MySQL server is enabled for Global Transaction Identifiers (GTID):

- GTID enabled

-

When the database is enabled for GTID, the MySQL Binary Log origin expects the initial offset to contain the range of transaction IDs to exclude from being processed. Use the following format to configure the offset:

<source ID>:1-<last transaction ID to exclude>For example, to begin reading the binary log file at the GTID8720bebf-bb38-11e6-97b1-0242ac110004:7066, enter8720bebf-bb38-11e6-97b1-0242ac110004:1-7065for the offset.Note: If you enter a single transaction ID for the offset, the origin skips that transaction ID only, reading all other transaction IDs. For example, if you enter8720bebf-bb38-11e6-97b1-0242ac110004:7065for the offset, the origin reads transaction IDs1through7064, skips7065, then begins reading again at7066. - GTID disabled

- When the database is disabled for GTID, the MySQL Binary Log origin expects the

initial offset to contain both the binary log file name and a position within

the file. Use the following format to configure the

offset:

<binary log file name>:<position>

When a pipeline stops, the MySQL Binary Log origin notes the offset where it stops reading. When the pipeline starts again, the origin continues processing from the last saved offset. You can reset the origin to process all requested objects.

Generated Records

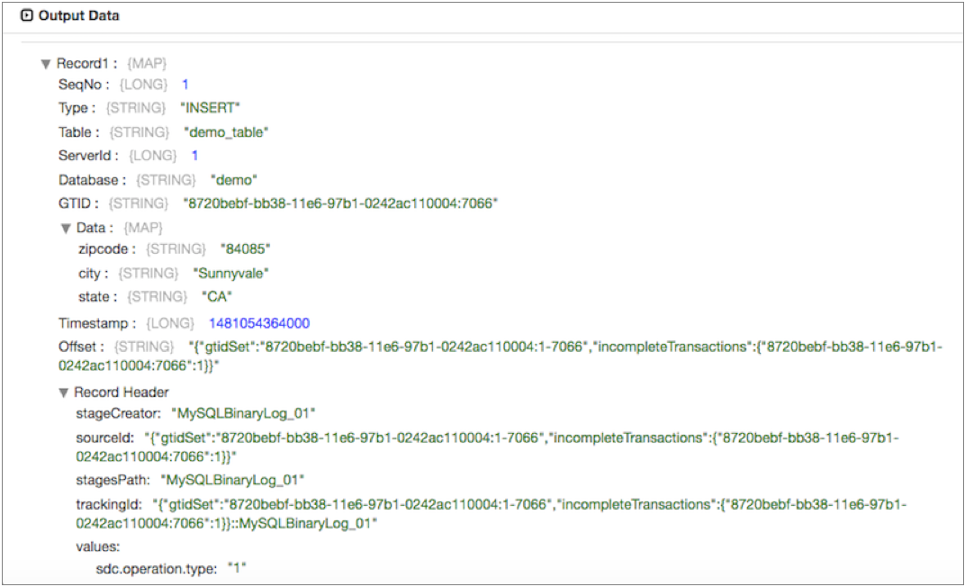

Each generated record includes the following information:

- CRUD operation type

- The CRUD operation type is stored in the Type record field and also in the

sdc.operation.typerecord header attribute. - Primary key information

-

When a table contains a primary key, the origin includes the following record header attribute:

jdbc.primaryKeySpecification- Provides a JSON-formatted string that lists the columns that form the primary key in the table and the metadata for those columns.For example, a table with a composite primary key contains the following attribute:jdbc.primaryKeySpecification = {{"<primary key column 1 name>": {"type": <type>, "datatype": "<data type>", "size": <size>, "precision": <precision>, "scale": <scale>, "signed": <Boolean>, "currency": <Boolean> }}, ..., {"<primary key column N name>": {"type": <type>, "datatype": "<data type>", "size": <size>, "precision": <precision>, "scale": <scale>, "signed": <Boolean>, "currency": <Boolean> } } }A table without a primary key contains the attribute with an empty value:jdbc.primaryKeySpecification = {}

For an update operation on a table with a primary key, the origin includes the following record header attributes:jdbc.primaryKey.before.<primary key column name>- Provides the old value for the specified primary key column.jdbc.primaryKey.after.<primary key column name>- Provides the new value for the specified primary key column.

Note: The origin provides the new and old values of the primary key columns regardless of whether the value changes. - Change data capture information

- The change data capture information is stored in the following record fields:

- BinLogFilename - when GTID is disabled

- BinLogPosition- when GTID is disabled

- SeqNo - when GTID is enabled

- GTID - when GTID is enabled

- Table

- ServerId

- Database

- Timestamp

- Offset

- New data

-

Fields that contain new data to be inserted or updated appear in a Data map field.

- Changed data

- Fields that contain old data to be updated, or fields in a delete record appear in an OldData map field.

For example, the origin generates the following record when it reads a binary log file for a MySQL server with GTID enabled for a table without a primary key:

Processing Generated Records

Due to the structure of the MySQL binary log records, you might want to use processors to restructure the record and remove any unnecessary fields. Of the CRUD-enabled destinations, only the Kudu and JDBC Producer destinations include a Change Log Format property that enables processing MySQL Binary Log records as generated.

For example, MySQL binary log records include CDC data in record fields. If you are using a destination other than Kudu and all you need is record data, you can use a Field Remover to remove the unwanted fields.

Similarly, update records include the previous data in an OldData map field, and the updated data in a Data map field. If all you need is the updated data, you can use the Field Remover to drop the OldData field from the record. And you could use a Field Flattener processor to flatten the fields in the Data field.

Tables to Include or Ignore

The binary log file captures all changes made to the MySQL database. If you want the MySQL Binary Log origin to capture changes from a subset of tables, you can configure the origin to include changes from specific tables or to ignore changes from specific tables.

<database name>.<table name>sales.orders,sales.customersIf you enter the same value in the Ignore Tables property, the origin captures changes from all tables in the log file, except for the orders and customers tables in the sales database.

The Ignore Tables property takes precedence. If you include a table in both properties, the origin ignores the table.

MySQL Data Types

The MySQL Binary Log origin converts MySQL data types into Data Collector data types.

| MySQL Data Type | Data Collector Data Type |

|---|---|

| Bigint, Bigint Unsigned | Long |

| Binary, Blob | Byte Array |

| Char | String |

| Date | Date |

| Datetime | Datetime |

| Decimal | Decimal |

| Double | Double |

| Enum | String |

| Float | Float |

| Int | Integer |

| Int Unsigned | Long |

| Json | String |

| Linestring, Point, Polygon | String |

| Medium Int | Integer |

| Medium Int Unsigned | Long |

| Numeric | Decimal |

| Set | String |

| Smallint, Smallint Unsigned | Integer |

| Text | String |

| Time | Time |

| Timestamp | Datetime |

| Tinyint, Tinyint Unsigned | Integer |

| Varbinary | Byte Array |

| Varchar | String |

| Year | Integer |

Configuring a MySQL Binary Log Origin

Configure a MySQL Binary Log origin to process change data capture (CDC) information provided by MySQL binary logs. Be sure to complete the necessary prerequisites before you configure the origin.

-

In the Properties panel, on the General tab, configure the

following properties:

General Property Description Name Stage name. Description Optional description. On Record Error Error record handling for the stage: - Discard - Discards the record.

- Send to Error - Sends the record to the pipeline for error handling.

- Stop Pipeline - Stops the pipeline.

-

On the MySQL Binary Log tab, configure the following

properties:

MySQL Binary Log Property Description JDBC Connection String JDBC connection string to connect. Use the following format: jdbc:mysql://<host>:<port>/<database>?<param1>=<value1>&<param2>=<value2>The database name and additional parameters are optional.

For example, the following JDBC connection strings are all valid:

jdbc:mysql://example.com:3306jdbc:mysql://example.com:3306/myDB?useSSL=truejdbc:mysql://example.com:3306/myDB?requireSSL=true&profileSQL=trueServer ID Replication server ID that the origin uses to connect to the source MySQL server. Must be unique from the server ID of the replication source and of all the other replication replicas. When the MySQL server database is enabled for GTID, the server ID is optional.

Start from Beginning Specifies whether to start reading events from the beginning of the binary log. When not selected, the origin begins reading events from the last saved offset. Initial Offset Read events starting at this offset in the binary log. If you configure an initial offset value and configure the origin to start from the beginning, then the origin starts reading from the initial offset.

Use Credentials Enables entering credentials on the Credentials tab. Use when you do not include credentials in the JDBC connection string.

-

On the Credentials tab, configure the following

properties:

Credentials Property Description Username MySQL username. The user account must have the following privileges:- Replication Client

- Replication Replica

- Select on included tables

Password MySQL password. Tip: To secure sensitive information such as user names and passwords, you can use runtime resources or credential stores. -

On the Advanced tab, configure the following

properties:

Advanced Property Description Max Batch Wait Time (ms) Number of milliseconds to wait before sending a partial or empty batch. Max Batch Size (records) Maximum number of records processed at one time. Honors values up to the Data Collector maximum batch size. Default is 1000. The Data Collector default is 1000.

Connection Timeout (ms) Maximum time in milliseconds to wait for a connection to the MySQL server. Enable KeepAlive Thread Enables using a Keep Alive thread to maintain the connection to the MySQL server. KeepAlive Interval Maximum number of milliseconds to keep an idle Keep Alive thread active before closing the thread. Include Tables List of tables to include when reading change events in the binary log file. Ignore Tables List of tables to ignore when reading change events in the binary log file.