Data Collector Communication

StreamSets Control Hub works with Data Collector to design pipelines and to execute standalone and cluster pipelines.

Control Hub runs on a public cloud service hosted by StreamSets, so you need only an account to get started. You install Data Collectors in your corporate network, which can be on-premises or on a protected cloud computing platform, and then register them to work with Control Hub.

- Authoring Data Collector

  Use an authoring Data Collector to design pipelines and to create connections. You can design pipelines in Control Hub Pipeline Designer after selecting an available authoring Data Collector. The selected authoring Data Collector determines the stages, stage libraries, and functionality that display in Pipeline Designer.

- Execution Data Collector

  Use an execution Data Collector to execute standalone and cluster pipelines run from Control Hub jobs.

A single Data Collector can serve both purposes. However, StreamSets recommends dedicating each Data Collector as either an authoring or execution Data Collector.

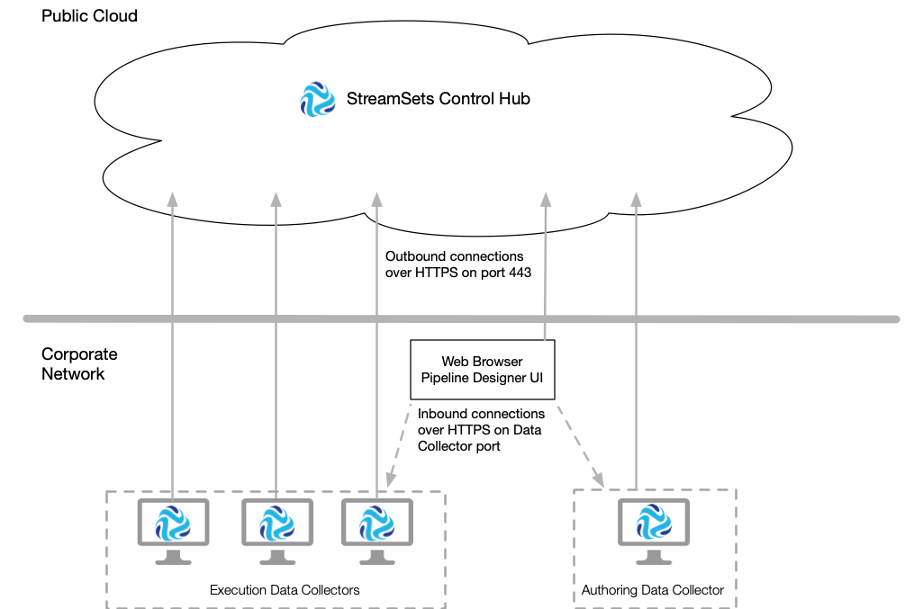

Registered Data Collectors use encrypted REST APIs to communicate with Control Hub. Data Collectors initiate outbound connections to Control Hub over HTTPS on port number 443.

The web browser that accesses Control Hub Pipeline Designer uses encrypted REST APIs to communicate with Control Hub. The web browser initiates outbound connections to Control Hub over HTTPS on port number 443.

The authoring Data Collector selected for Pipeline Designer or connection creation accepts inbound connections from the web browser on the port number configured for the Data Collector. Similarly, the execution Data Collector accepts inbound connections from the web browser when you monitor real-time summary statistics, error information, and snapshots for active jobs. The connections must be HTTPS.

The following image shows how authoring and execution Data Collectors communicate with Control Hub:
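The connection directions described above can be summarized as a small data model. This is a hypothetical Python sketch, not a StreamSets API: the component names are illustrative labels, and 18630 is only an example value for the port configured on a Data Collector. Recording which side initiates each connection lets you derive the inbound ports each host must open and the outbound destinations it must reach.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class Connection:
    """One network connection; `source` initiates it, `target` accepts it."""
    source: str
    target: str
    port: int
    protocol: str = "HTTPS"


def connections(sdc_port: int) -> List[Connection]:
    """Connections implied by the text; `sdc_port` is the Data Collector's configured port."""
    return [
        # Data Collectors and the browser reach Control Hub outbound on 443.
        Connection("authoring_sdc", "control_hub", 443),
        Connection("execution_sdc", "control_hub", 443),
        Connection("browser", "control_hub", 443),
        # Pipeline Designer and connection creation use the authoring Data Collector.
        Connection("browser", "authoring_sdc", sdc_port),
        # Monitoring statistics, errors, and snapshots for active jobs.
        Connection("browser", "execution_sdc", sdc_port),
    ]


def inbound_ports(component: str, conns: List[Connection]) -> Set[int]:
    """Ports on which `component` must accept incoming connections."""
    return {c.port for c in conns if c.target == component}


def outbound_targets(component: str, conns: List[Connection]) -> Set[Tuple[str, int]]:
    """(target, port) pairs that `component` must be able to reach."""
    return {(c.target, c.port) for c in conns if c.source == component}
```

In this model no connection originates from Control Hub, so a Data Collector needs inbound access only for the browser on its own configured port, plus outbound HTTPS to Control Hub on 443.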

Data Collector Requests

Registered Data Collectors send requests and information to Control Hub.

Control Hub does not send requests directly to Data Collectors. Instead, Control Hub uses encrypted REST APIs to place requests in a messaging queue that it manages. Data Collectors periodically poll the queue to retrieve Control Hub requests.
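The pull model above can be illustrated with an in-memory stand-in for the messaging queue. This Python sketch is hypothetical: `MessagingQueue`, `Request`, the action names, and the 5-second polling interval are assumptions for illustration and say nothing about how Control Hub actually implements its queue. It shows why a Data Collector never has to accept a connection from Control Hub: requests wait in the queue until the Data Collector fetches them.

```python
import time
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Callable, DefaultDict, Deque, List


@dataclass(frozen=True)
class Request:
    """A request from Control Hub addressed to one Data Collector (hypothetical)."""
    sdc_id: str
    action: str


class MessagingQueue:
    """In-memory stand-in for the messaging queue managed by Control Hub."""

    def __init__(self) -> None:
        self._pending: DefaultDict[str, Deque[Request]] = defaultdict(deque)

    def put(self, request: Request) -> None:
        # Control Hub side: enqueue rather than contacting the Data Collector.
        self._pending[request.sdc_id].append(request)

    def fetch(self, sdc_id: str) -> List[Request]:
        # Data Collector side: take everything waiting for this Data Collector.
        pending = self._pending[sdc_id]
        requests = list(pending)
        pending.clear()
        return requests


def poll_once(mq: MessagingQueue, sdc_id: str, handle: Callable[[Request], None]) -> int:
    """Run one polling cycle and return how many requests were handled."""
    requests = mq.fetch(sdc_id)
    for request in requests:
        handle(request)
    return len(requests)


def poll_loop(mq: MessagingQueue, sdc_id: str, handle: Callable[[Request], None],
              interval_s: float = 5.0, cycles: int = 3) -> int:
    """Poll a fixed number of times, sleeping between cycles; returns total handled."""
    total = 0
    for _ in range(cycles):
        total += poll_once(mq, sdc_id, handle)
        time.sleep(interval_s)
    return total
```

Because the Data Collector initiates every exchange, it can sit behind a firewall that allows only outbound HTTPS and still receive requests; the trade-off is that a request may wait up to one polling interval before it is picked up.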

Data Collectors communicate with Control Hub in the following areas:

- Pipeline management

  When you use an authoring Data Collector to publish a pipeline to Control Hub, the Data Collector sends the request to Control Hub.

- Connections

  When you start a job for a pipeline that uses a connection, the execution Data Collector requests the connection properties from Control Hub.

- Jobs

  Every minute, Data Collectors send a heartbeat, the last-saved offsets, and the status of all remotely running pipelines to Control Hub so that Control Hub can manage job execution.

  Note: Data Collector versions 3.13.0 and earlier send this information to the messaging queue instead.

- Security

  When you enable Control Hub within a Data Collector or when a user logs in to a registered Data Collector, the Data Collector makes an authentication request to Control Hub.

- Metrics

  Every minute, an execution Data Collector sends aggregated metrics for remotely running pipelines to Control Hub.

- Messaging queue

  Data Collectors send the following information to the messaging queue:

  - At startup, a Data Collector sends its Data Collector version, its URL, and the labels configured in the Control Hub configuration file, $SDC_CONF/dpm.properties.
  - When you update permissions on local pipelines, the Data Collector sends the updated pipeline permissions.
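The following Python sketch summarizes where each kind of message in the list above is sent, including the version-dependent routing of heartbeat information, and how a once-a-minute heartbeat might be assembled. The message-type keys, the JSON field names, and the payload shape are hypothetical; only the one-minute interval and the routing rules come from the text.

```python
import json
import time
from typing import Dict, Optional, Tuple

# One-minute interval from the text; all names below are hypothetical.
REPORT_INTERVAL_S = 60

# Destination of each kind of Data Collector message, per the list above.
DESTINATIONS = {
    "publish_pipeline": "control_hub",
    "connection_properties": "control_hub",
    "heartbeat": "control_hub",
    "authentication": "control_hub",
    "metrics": "control_hub",
    "startup_info": "messaging_queue",
    "pipeline_permissions": "messaging_queue",
}


def parse_version(version: str) -> Tuple[int, ...]:
    """Convert '3.13.0' to (3, 13, 0), ignoring a suffix such as '-0001'."""
    return tuple(int(part) for part in version.split("-")[0].split("."))


def destination(message_type: str, sdc_version: str) -> str:
    """Return where a Data Collector of the given version sends a message."""
    # Data Collector 3.13.0 and earlier sends heartbeat information to the queue.
    if message_type == "heartbeat" and parse_version(sdc_version) <= (3, 13, 0):
        return "messaging_queue"
    return DESTINATIONS[message_type]


def report_due(last_sent_s: float, now_s: float) -> bool:
    """True once at least one minute has passed since the last report."""
    return now_s - last_sent_s >= REPORT_INTERVAL_S


def build_heartbeat(
    sdc_id: str,
    pipelines: Dict[str, Tuple[str, str]],
    now_s: Optional[float] = None,
) -> str:
    """Serialize a heartbeat; `pipelines` maps pipeline ID -> (status, last-saved offset)."""
    payload = {
        "sdcId": sdc_id,
        "timestamp": int(time.time() if now_s is None else now_s),
        "pipelines": [
            {"pipelineId": pid, "status": status, "lastSavedOffset": offset}
            for pid, (status, offset) in sorted(pipelines.items())
        ],
    }
    return json.dumps(payload)
```

Keeping the routing in one table makes the version-specific exception for heartbeat information explicit rather than scattering it across call sites.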