What's New

What's New in 3.57.x

StreamSets Control Hub version 3.57.x, released in April 2024, includes an enhancement and several fixed issues.

Enhancement

- MariaDB support

- Control Hub supports MariaDB version 10.11 for the relational database that stores metadata written by Control Hub applications.

Fixed Issues

- Scheduled tasks sporadically fail to start, sometimes displaying a "Connection is closed" error in the run history. (20158, 20129)

- The Update Stage Libraries dialog box does not save updates. (19532, 20115)

What's New in 3.56.x

StreamSets Control Hub version 3.56.x, released in March 2024, includes several enhancements.

Enhancements

This release includes the following enhancements:

- MySQL support

- Control Hub supports MySQL version 8.x for the relational database that stores metadata written by Control Hub applications.

Control Hub continues to support MySQL 5.6 and 5.7. However, these earlier MySQL versions have reached end of life. After you upgrade Control Hub to version 3.56.x, you might consider upgrading to MySQL 8.x as a post-upgrade task.

- Updated configuration files

- The $DPM_CONF/jobrunner-app.properties configuration file includes the following new properties that determine how Control Hub purges orphaned pipeline offsets:

- enable.orphan.pipeline.offsets.purge

- orphan.offsets.purge.freq.minutes

- orphan.offsets.purge.init.delay.minutes

The $DPM_CONF/timeseries-app.properties configuration file includes the following new properties that determine how Control Hub purges temporary job metrics:

- enable.tmp.metrics.purge

- tmp.metrics.purge.init.delay.minutes

- tmp.metrics.purge.freq.minutes

- tmp.metrics.purge.batch.size

Important: StreamSets recommends that you keep the default values for all of these properties.

What's New in 3.55.x

StreamSets Control Hub version 3.55.x, released in December 2023, includes several enhancements.

Enhancements

This release includes the following enhancements:

- Support bundles

- System administrators can generate a support bundle for Control Hub. A support bundle is a ZIP file that includes the Control Hub log file, environment and configuration information, organization information, and other details to help troubleshoot issues.

- Updated configuration files

- The $DPM_CONF/dpm.properties configuration file includes the new property bundle.polling.period.seconds that determines how often Control Hub checks for requests to generate a support bundle.

The $DPM_CONF/security-app.properties configuration file includes the following new properties that determine how Control Hub generates a support bundle:

- dpm.bundle.generation.timeout.seconds

- dpm.bundle.order.cleanup.periodicity.seconds

- dpm.bundle.order.cleanup.task.timeout.threshold.seconds

- dpm.bundle.max.orders.per.batch.in.cleanup

The $DPM_CONF/jobrunner-app.properties configuration file includes the following new properties that determine how Control Hub purges job, job status, and job status history information:

- enable.job.status.purge.when.job.starts

- enable.job.status.history.purge.when.history.added

- job.pre.deleter.max.status.batches.per.job

- job.pre.deleter.max.history.batches.per.status

- job.pre.deleter.status.batch.size

- job.pre.deleter.history.batch.size

- job.pre.deleter.max.offset.batches.per.status

- job.pre.deleter.offset.batch.size

- job.pipeline.offsets.purge.batch.size

What's New in 3.54.x

StreamSets Control Hub version 3.54.x, released in October 2023, includes several enhancements.

Enhancements

This release includes the following enhancements:

- Authoring engine timeout

- By default, Control Hub waits five seconds for a response from an authoring engine before considering the engine as inaccessible. An organization administrator can modify the default value in the organization properties.

- Alerts

- When the alert text defined for a data SLA or pipeline alert exceeds 255 characters, Control Hub truncates the text to 244 characters and adds a [TRUNCATED] suffix to the text so that the triggered alert is visible when you click the Alerts icon in the top toolbar.
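The truncation rule for alert text can be sketched in Python as follows. This is only an illustration, not Control Hub code: the function name truncate_alert_text and the constant names are hypothetical. Only the 255-character limit, the 244-character cut, and the [TRUNCATED] suffix come from the release note.

```python
# Hypothetical names; only the numeric limits and the suffix come from the release note.
MAX_ALERT_LENGTH = 255
KEPT_LENGTH = 244
TRUNCATION_SUFFIX = "[TRUNCATED]"


def truncate_alert_text(text: str) -> str:
    """Return the alert text unchanged, or cut to 244 characters plus the suffix."""
    if len(text) <= MAX_ALERT_LENGTH:
        return text
    # 244 kept characters + 11 suffix characters = 255 characters in total.
    return text[:KEPT_LENGTH] + TRUNCATION_SUFFIX
```

Because the suffix is 11 characters long, a truncated alert is exactly 255 characters, so it fits within the same limit that triggered the truncation.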

What's New in 3.53.x

StreamSets Control Hub version 3.53.x, released in May 2023, includes several enhancements and behavior changes.

Enhancements

This release includes the following enhancements:

- Pipeline and fragment export

- Updated configuration files

- The $DPM_CONF/jobrunner-app.properties configuration file includes the following new properties that determine how Control Hub maintains the last-saved offsets when balancing or synchronizing Data Collector jobs:

- offset.migration.timeout.millis

- offset.migration.task.periodicity.seconds

- offset.migration.task.initial.delay.seconds

Behavior Changes

This release includes the following behavior changes:

- StreamSets Data Collector Edge

- StreamSets has paused all development on Data Collector Edge and is not supporting any active customer installations of Data Collector Edge. As a result, you can no longer download and register Data Collector Edge for use with Control Hub.

- Balancing and synchronizing jobs

- When you balance or synchronize a Data Collector job, Control Hub now temporarily stops the job to ensure that the last-saved offset from each pipeline instance is maintained. Control Hub then reassigns the pipeline instances to Data Collectors and restarts the job.

What's New in 3.52.x

StreamSets Control Hub version 3.52.x, released in April 2023, includes the following enhancement:

- Updated configuration files

- The $DPM_CONF/jobrunner-app.properties configuration file includes the following new properties:

- enable.job.without.runs.purge - Automatically deletes inactive job instances that have never been run and are older than the specified number of days. Default is false, which means that Control Hub indefinitely retains inactive job instances with no job runs.

- purge.old.jobs.without.runs.days - Number of days that an inactive job instance that has never been run can exist before it is automatically deleted. Default is 365 days.
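The way the two purge properties interact can be sketched in Python as follows. This is a rough illustration under stated assumptions, not the Control Hub implementation: the JobInstance class, its fields, and the should_purge function are hypothetical, and measuring the age of a job from its creation time is an assumption. The defaults (purge disabled, 365 days) come from the property descriptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class JobInstance:
    """Hypothetical stand-in for a Control Hub job instance."""
    name: str
    created: datetime  # assumption: age is measured from creation time
    run_count: int
    active: bool


def should_purge(job, now, purge_enabled=False, max_age_days=365):
    """Mirror enable.job.without.runs.purge and purge.old.jobs.without.runs.days."""
    if not purge_enabled:
        # Default behavior: inactive jobs with no runs are retained indefinitely.
        return False
    if job.active or job.run_count > 0:
        # Only inactive jobs that have never been run are candidates.
        return False
    return now - job.created > timedelta(days=max_age_days)
```

With the default values, should_purge always returns False, which matches the documented default of retaining never-run job instances indefinitely.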

What's New in 3.51.x

StreamSets Control Hub version 3.51.x, released in November 2022, includes the following new features:

- Job status

- Jobs that have not run are listed with an Inactive status. Previously, jobs that had not run were listed without a status.

- Control Hub logs

- Control Hub uses the Apache Log4j 2.17.2 library to write log data. In the previous release, Control Hub used the Apache Log4j 2.17.1 library.

- Updated configuration files

- The configuration file for each Control Hub application, such as $DPM_CONF/connection-app.properties or $DPM_CONF/jobrunner-app.properties, includes a new dpm.app.max.event.queue.size property that determines the maximum number of events to store in memory. In most cases, the default value should work.

What's New in 3.50.x

StreamSets Control Hub version 3.50.x, released in May 2022, includes the following new features:

- Control Hub logs

- Control Hub uses the Apache Log4j 2.17.1 library to write log data. In previous releases, Control Hub used the Apache Log4j 1.x library, which is now end-of-life. This change can affect upgrades.

- Starting multiple scheduled jobs at the same time

- When you start multiple jobs at the exact same time using the scheduler, the number of pipelines running on an engine can exceed the Max Running Pipeline Count configured for the engine.

If exceeding the resource threshold is not acceptable, you can enable an organization property that synchronizes the start of multiple scheduled jobs. However, be aware that enabling the property can cause scheduled jobs to take longer to start.

- Updated configuration files

- The $DPM_CONF/dpm-log4j.properties configuration file has been renamed to dpm-log4j2.properties and now uses the Log4j 2.x syntax. This can have upgrade impact.

The $DPM_CONF/messaging-app.properties configuration file includes several new properties that enhance the log messages about Control Hub and engine communication. In most cases, the default values should work.

The $DPM_CONF/timeseries-app.properties configuration file includes several new InfluxDB properties. Do not modify these property values.

What's New in 3.25.x

StreamSets Control Hub version 3.25.x, released in March 2022, includes the following new features:

- Scheduled tasks

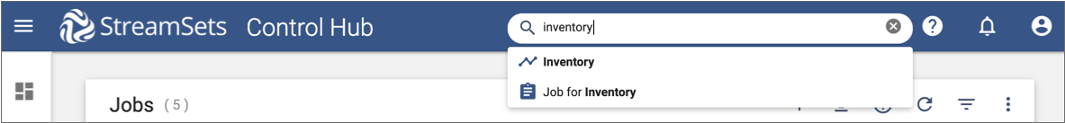

- While creating a scheduled task, you can search for the job to schedule.

- SDC_ID subscription parameter displays as Engine ID in the user interface

- When you create a subscription and define a simple condition for an execution engine not responding event, the SDC_ID parameter now displays as Engine ID in the UI, instead of SDC_ID. The Engine ID label indicates that the parameter can include the ID of any engine type.

What's New in 3.24.x

StreamSets Control Hub version 3.24.x, released in February 2022, includes the following new feature:

- Control Hub REST API

- When you use the Control Hub Job Runner REST API to return all jobs, you can now use additional parameters to search for jobs by name. You can search for jobs with names that equal the specified search text or search for jobs with names that start with the specified search text.

What's New in 3.23.x

StreamSets Control Hub version 3.23.x, released in December 2021, includes the following new features and enhancements:

- Provisioned Data Collectors

- StreamSets has certified provisioning Data Collectors to AWS Fargate with Amazon Elastic Kubernetes Service (EKS). When using AWS Fargate with EKS, you must add additional attributes to the deployment YAML specification file.

- Jobs

- By default, when a job encounters an inactive error status, users must acknowledge the error message before the job can be restarted.

- Pipeline preview

- By default, Control Hub does not save the preview record schema in the pipeline configuration. When you close and then reopen a pipeline, the Schema tab for each pipeline stage is empty. You must run preview again to update the input and output schema.

- Updated Configuration Files

- The $DPM_CONF/messaging-app.properties configuration file includes the following new properties that determine how events are purged from the Messaging application. In most cases, the default values should work:

- event.use.received.time.seconds.nanos

- event.expiry.delete.task.enabled

- event.expiry.delete.task.initial.delay.millis

- event.expiry.delete.task.frequency.millis

- event.expiry.delete.task.chunk

- event.expiry.seconds

- event.legacy.delete.task.enabled

- event.legacy.delete.task.initial.delay.millis

- event.legacy.delete.task.frequency.millis

- event.legacy.delete.task.chunk

What's New in 3.22.x

StreamSets Control Hub version 3.22.x, released in July 2021, includes the following new features and enhancements:

- Jobs

- When you enable a Data Collector or Transformer job for pipeline failover, you can configure the global number of pipeline failover retries to attempt across all available engines. When the limit is reached, Control Hub stops the job.

- Subscriptions

- You can configure a subscription action for a maximum global failover retries exhausted event. For example, you might create a subscription that sends an alert to a Slack channel when a job has exceeded the maximum number of pipeline failover retries across all available engines.

- SAML Authentication

- As a best practice, Control Hub provides a SAML backdoor that allows administrators to log in using Control Hub credentials if the SAML IdP is incorrectly configured and users cannot log in using SAML authentication. SAML providers recommend having a backdoor available for administrators to use to access a locked system.

- Organizations

- The Control Hub system administrator can limit the number of objects retrieved by API requests by clearing the Disable API Offset and Length Checks organization property. When selected, a single API request can return all results. Clear the property if API requests consume a large number of resources. In most cases, you do not need to modify this property.

- PostgreSQL Support

- Starting in version 3.22.3, Control Hub supports PostgreSQL version 11.10 for the relational database that stores metadata written by Control Hub applications.

- Updated Configuration Files

- The $DPM_CONF/common-to-all-apps.properties configuration file includes the following new properties that you can modify to determine the maximum number of results a single API request can return:

- dpm.api.pagination.parameters.check.enabled

- dpm.api.default.len.value

- dpm.api.max.len.value
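One way to picture how the three pagination properties might limit API results is the Python sketch below. The release note does not describe the exact behavior, so everything here is an assumption made for illustration: the function name effective_page_length is hypothetical, and so is the idea that a missing length falls back to the default while an oversized length is capped at the maximum.

```python
def effective_page_length(requested_len, check_enabled, default_len, max_len):
    """Return how many results a request may receive; None means all results.

    Assumed mapping (not confirmed by the release note):
      check_enabled -> dpm.api.pagination.parameters.check.enabled
      default_len   -> dpm.api.default.len.value
      max_len       -> dpm.api.max.len.value
    """
    if not check_enabled:
        # Checks disabled: the request is honored as-is, including "all results".
        return requested_len
    if requested_len is None:
        # No length supplied: fall back to the configured default.
        return default_len
    # Cap an explicit length at the configured maximum.
    return min(requested_len, max_len)
```

For example, with hypothetical values of 50 for the default and 200 for the maximum, a request for 1000 results would be capped at 200 when the checks are enabled.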

What's New in 3.21.x

StreamSets Control Hub version 3.21.x, released in April 2021, includes the following new features and enhancements:

- Pipeline Design

- Undo and Redo icons - The toolbar above the pipeline canvas includes an Undo icon to revert recent changes, and a Redo icon to restore changes that were reverted.

- Copy and paste multiple stages and fragments - You can select multiple stages and fragments, copy them to the clipboard, and then paste the stages and fragments into the same pipeline or into another pipeline.

Previously, you could duplicate a single stage or fragment within the same pipeline only.

- Scheduled Tasks

- By default, Control Hub retains the details for scheduled task runs for 30 days or for a maximum of 100 runs, and then purges them. You can change these values by modifying the organization configuration properties. Previously, Control Hub retained the details for scheduled task runs indefinitely.

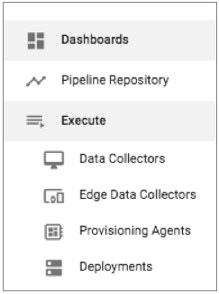

- Execution Engines

- When you monitor registered Data Collectors and Transformers from the Execute view, you can access the following information for each execution engine:

- Support bundle - Allows you to generate a support bundle, or archive file, with the information required to troubleshoot various issues with the engine.

- Logs - Displays the log data for the engine. You can download the log and modify the log level when needed.

- Directories - Lists the directories that the engine uses.

- Health Inspector - Displays information about the basic health of the Data Collector. Health Inspector provides a snapshot of how the Data Collector JVM, machine, and network are performing. Available for Data Collector engines only.

- Updated Configuration Files

- Starting in version 3.21.1, the $DPM_CONF/dpm.properties configuration file includes a new property, ui.userflow.key. Do not define a value for this property.

What's New in 3.20.x

StreamSets Control Hub version 3.20.x, released in February 2021, includes the following new features and enhancements:

- Dashboards

- The Dashboards view includes the following dashboards:

- Alerts Dashboard - Provides a summary of triggered alerts, jobs with errors, offline execution engines, and unhealthy engines that have exceeded their resource thresholds. Use to monitor and troubleshoot jobs.

- Topologies Dashboard - Provides a summary of the number of pipelines, jobs, topologies, and execution engines that you have access to. The Topologies Dashboard was previously named the Default Dashboard.

- Alerts

- Control Hub retains acknowledged data SLA and pipeline alerts for 30 days, and then purges them. Previously, Control Hub retained acknowledged alerts indefinitely.

- Data Collector Pipelines

- When designing Data Collector pipelines using an authoring Data Collector version 3.18.x or later, pipelines and stages include advanced options with default values that should work in most cases. By default, new and upgraded pipelines hide the advanced options. Advanced options can include individual properties or complete tabs.

- Jobs

- Pipeline failover for Transformer jobs - You can enable a Transformer job for pipeline failover for some cluster types when the job runs on Transformer version 3.17.0 or later. Enable pipeline failover to prevent Spark applications from failing due to an unexpected Transformer shutdown. When enabled for failover, Control Hub can reassign the job to an available backup Transformer.

At this time, you can enable failover for jobs that include pipelines configured to run on an Amazon EMR or Google Dataproc cluster. You cannot enable failover for other cluster types.

- View custom stage metrics for Data Collector jobs - When a Data Collector pipeline includes stages that provide custom metrics, you can view the custom metrics in the Realtime Summary tab as you monitor the job.

- Reports

- The Reports view includes the following views:

- Reports - Provides a set of predefined reports that give an overall summary of the system. Use these predefined reports to monitor and troubleshoot jobs.

- Report Definitions - Allows you to define a custom data delivery report that provides data processing metrics for a given job or topology. The Report Definitions view was previously named the Reports view.

- Subscriptions

- Visible subscription parameters - When you define conditions to filter events, subscription parameters are now visible in the UI. You can select a parameter and define its value to create a simple condition. You can also create advanced conditions using the StreamSets expression language.

Previously, you had to view the documentation for the list of subscription parameters. In addition, you had to use the expression language to define all conditions, including simple conditions.

- Single event types - Subscriptions have been simplified so that you can create a subscription for a single event type instead of for multiple event types. Because you can only define a single action for a subscription, it’s more practical to create a subscription for a single event type. In most cases, you’ll want to define a unique action for each event type.

If you previously created subscriptions for multiple event types, the upgrade process successfully upgrades the subscription and displays each event type. You can continue to use these subscriptions. However, you cannot create new subscriptions for multiple event types.

- Execution Engines

- When you monitor registered Data Collectors and Transformers from the Execute view, you can view the following information for each execution engine:

- Configuration - Lists configuration properties for the engine.

- Thread dump - Lists all active Java threads used by the engine.

- Metrics - Displays metric charts about the engine, such as the CPU usage, threads, and heap memory usage.

- Updated Configuration Files

- The $DPM_CONF/connection-app.properties file includes the following new properties that determine how entries in the connection audit are purged:

- enable.connection.audit.purge - Determines whether Control Hub purges connection audit entries.

- connection.audit.purge.age.days - Maximum number of days to retain connection audit entries.

- connection.audit.purge.init.delay.minutes - Number of minutes to wait as an initial delay before checking for connection audit entries to purge.

- connection.audit.purge.freq.minutes - Number of minutes to wait between checks to purge connection audit entries.

What's New in 3.19.x

StreamSets Control Hub version 3.19.x, released in December 2020, includes the following new features and enhancements:

- Connections

- You can create connections to define the information required to access data in external systems. You share the connections with data engineers, and they simply select the appropriate connection name when configuring pipelines and pipeline fragments in Control Hub Pipeline Designer.

- Jobs

- When monitoring a job run on Transformer version 3.16.0 or later, you can view the contents of the Spark driver log from the Control Hub UI for the following types of pipelines:

- Local pipelines

- Cluster pipelines run in Spark standalone mode

- Cluster pipelines run on Kubernetes

- Cluster pipelines run on Hadoop YARN in client deployment mode

- Organization Configuration

- System administrators can now configure the system limit for the execution engine heartbeat interval at the global level for all organizations. The interval determines the maximum number of seconds since the last reported execution engine heartbeat before Control Hub considers the engine as unresponsive.

- New Configuration File

- This release includes a new $DPM_CONF/connection-app.properties configuration file used to configure the Connection application.

- Updated Configuration File

- The $DPM_CONF/jobrunner-app.properties configuration file no longer includes the failover.time.secs property. Instead, system and organization administrators can configure the execution engine heartbeat interval in the Control Hub UI.
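The role of the execution engine heartbeat interval can be sketched in Python as follows. This is a minimal illustration, not Control Hub code: the function name is_engine_unresponsive and its parameters are hypothetical. The only rule taken from the release note is that an engine is considered unresponsive when the time since its last reported heartbeat exceeds the configured number of seconds.

```python
import time


def is_engine_unresponsive(last_heartbeat, max_interval_seconds, now=None):
    """Return True when the last heartbeat is older than the allowed interval.

    last_heartbeat and now are epoch timestamps in seconds (an assumption).
    """
    if now is None:
        now = time.time()
    return (now - last_heartbeat) > max_interval_seconds
```

For instance, with a hypothetical interval of 60 seconds, an engine whose last heartbeat arrived 100 seconds ago would be treated as unresponsive, while one that reported 50 seconds ago would not.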

What's New in 3.18.x

StreamSets Control Hub version 3.18.x includes the following new features and enhancements:

- Update Jobs when Publishing a Pipeline

- When publishing a pipeline, you can update jobs that include the pipeline to use the latest pipeline version. You can also easily create a new job using the latest pipeline version.

- Sample Pipelines

- "Pipeline templates" are now known as "sample pipelines".

The pipeline repository provides a new Sample Pipelines view that makes it easier to view a sample pipeline to explore how the pipeline and stages are configured. You can duplicate a sample pipeline to use it as the basis for building your own pipeline.

- Pipelines and Pipeline Fragments Views

- Filter pipelines and fragments by status - In the Pipelines and Pipeline Fragments views, you can filter the list of pipelines or fragments by status. For example, you can filter the list to display only published pipelines or to display only draft pipelines.

- User who last modified a pipeline or fragment - The Pipelines and Pipeline Fragments views include a column that lists the user who last modified each pipeline or fragment.

- Pipeline Design

- Stage library panel display and stage installation for Data Collector pipelines - The stage library panel in the pipeline canvas displays all Data Collector stages, instead of only the stages installed on the selected authoring Data Collector.

Stages that are not installed appear disabled, or greyed out.

When the selected authoring Data Collector is a tarball installation, you can click on a disabled stage to install the stage library that includes the stage on the authoring Data Collector. Previously, you had to log into Data Collector to install additional stage libraries.

- Install external libraries from the properties panel - You can select a stage in the pipeline canvas and then install external libraries for that stage from the properties panel. Previously, you had to log into Data Collector or Transformer to install external libraries.

- View all jobs that include a pipeline version - When viewing a pipeline in Pipeline Designer, you can view the complete list of jobs that include that pipeline version.

- Optional parameter name prefix for fragments - When adding a fragment to a pipeline, you can remove the parameter name prefix. You might remove the prefix when reusing a fragment in a pipeline and you want to use the same values for the runtime parameters in those fragment instances.

- Jobs

- When monitoring an active Data Collector or Transformer job, you can view the log for the execution engine running the remote pipeline instance. You can filter the messages by log level or open the log in the execution engine UI.

- Updated Configuration Files

- The $DPM_CONF/jobrunner-app.properties configuration file includes the following new properties:

- jobs.status.monitor.threads - Maximum number of threads used to monitor the status of all jobs.

- jobs.schedule.threads - Maximum number of threads used to schedule jobs that have not yet started because all available Data Collectors have exceeded the resource thresholds.

What's New in 3.17.x

StreamSets Control Hub version 3.17.x includes the following new features and enhancements:

- Installation Requirements

- Control Hub supports Java 11, in addition to Java 8.

- Pipeline Design

- Pipeline test run history - The test run history for a draft pipeline displays the input, output, and error record count for each test run.

- Transformer pipeline validation - You can use Pipeline Designer to validate a Transformer pipeline against the cluster configured to run the pipeline.

- Jobs

- Tags - You can assign tags to jobs to identify similar jobs and job templates. Use job tags to easily search and filter jobs and job templates in the Jobs view.

- Pipeline status - The status of remote pipeline instances run from a job is more visible in the Jobs view.

- Job run history - The run history for a job displays the input, output, and error record count for each job run.

- Snapshots

- Preview source - You can use snapshot data as the source data when previewing Data Collector pipelines.

- Rename snapshots - You can rename captured snapshots.

- View snapshots after pipeline test run stops - You can view snapshots captured for a pipeline test run after the test run stops.

- Failure snapshots for pipeline test runs - When a pipeline test run fails, Control Hub automatically captures a failure snapshot which you can view to troubleshoot the problem.

- Scheduler

- Scheduled task details - The details of a scheduled task include the name and link to the report or job that has been scheduled.

- View audit - When you view the audit of all changes made to a scheduled task, the audit lists the most recent change first.

- Subscriptions

- You can use the PIPELINE_COMMIT_ID parameter for a subscription triggered by a pipeline committed event.

- Export

- You can export all pipelines, pipeline fragments, jobs, or topologies by selecting the More > Export All option from the appropriate view.

- UI Improvements

- Global search - You can globally search for pipelines, pipeline fragments, jobs, and topologies by name using the search field in the top toolbar.

- Pagination - All views except for the Scheduler view display long lists over multiple pages.

- Roles

- The default system organization with an ID of admin includes a new License Administrator role that allows a user to complete limited administrative tasks across all organizations.

- Control Hub REST API

- The Control Hub REST API includes the following enhancements:

- New PipelineStore Metrics API - Retrieves all pipelines created by users in a group within a specified time period.

- Security Metrics APIs - Additional Security Metrics APIs that retrieve the following information:

- Retrieve all users that have logged in within a specified time period.

- Retrieve all users that have not logged in within a specified time period.

- Retrieve all users created within a specified time period.

- Retrieve all users that don’t belong to a specified group.

- Updated Configuration Files

- The $DPM_CONF/jobrunner-app.properties configuration file includes the following new properties:

- jobs.max.instances.autoscale.limit - Maximum number of pipeline instances that can be run for a job configured to automatically scale out pipeline processing.

- jobs.failover.threads - Maximum number of threads used for pipeline failover.

What's New in 3.16.x

StreamSets Control Hub version 3.16.x includes the following new features and enhancements:

- Pipelines and Pipeline Fragments

- A microservice sample pipeline is now available when creating a Data Collector pipeline from a sample pipeline.

- Pipeline Designer can now use field information from data preview in the following ways:

- Some field properties have a Select Fields Using Preview Data icon that you can use to select fields from the last data preview.

- As you type a configuration, the list of valid values includes fields from the input and output schema extracted from the preview.

- Fields in the Schema tab and in the data preview have a Copy Field Path to Clipboard icon that you can use to copy a field path, which you can then paste where needed.

- The Pipelines view and the Pipeline Fragments view now display long lists over multiple pages.

- The Pipelines view and the Pipeline Fragments view now offer additional support for filters:

- Click the Keep Filter Persistent checkbox to retain the last applied filter when you return to the view.

- Save or share the URL to reopen the view with the applied filter later or on a different browser.

- Snapshots

- You can now capture snapshots during pipeline test runs or job runs for Data Collector. You can view a snapshot to see the pipeline records at a point in time and you can download the snapshot file. The Data Collector instance used for the snapshot depends on where you take the snapshot:

- Snapshots taken from a pipeline test run use the selected authoring Data Collector.

- Snapshots taken while monitoring a job use the execution Data Collector for the job run. When there is more than one execution Data Collector, the snapshot uses the Data Collector selected in the monitoring detailed view.

- Jobs

- From the Jobs view, you can now duplicate a job or job template to create one or more exact copies of an existing job or job template. You can then change the configuration and runtime parameters of the copies.

- The color of the job status in the Jobs view during deactivation depends on how the job was deactivated:

- Jobs stopped automatically due to an error have a red deactivating status.

- Jobs stopped as requested or as expected have a green deactivating status.

- The Jobs view now offers additional support for filters:

- Click the Keep Filter Persistent checkbox to retain the last applied filter when you return to the view.

- Save or share the URL to reopen the view with the applied filter later or on a different browser.

- The monitoring panel now shows additional information about job runs:

- The Summary tab shows additional metrics, such as record throughput.

- The History tab has a View Summary link that opens a Job Metrics Summary page for previous job runs.

- System Data Collector

- Administrators can now enable or disable the system Data Collector for use as the default authoring Data Collector in Pipeline Designer. By default, the system Data Collector is enabled for existing organizations, but disabled for new organizations.

- Control Hub REST API

- The Control Hub REST API includes a new Control Hub Metrics category that contains several RESTful APIs:

- Job Runner Metrics APIs retrieve metrics on job runs and executor uptime, CPU, and memory usage.

- Time Series APIs retrieve metrics on job runs and executor CPU and memory usage over time.

- Security Metrics APIs retrieve login and action audit reports.

- Organization Configuration

- System administrators can now configure the following organization properties at an organization level in addition to a global level:

- System limit on the maximum number of job runs

- System limit on the maximum number of days before job status history is purged

- System limit on the maximum number of time series purge days

When organization administrators save organization properties, Control Hub verifies that the system limits are not exceeded.
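The verification step can be pictured as a simple bounds check. The following is a minimal sketch only: the `Limits` class, its field names, and the sample values are hypothetical and are not actual Control Hub property names or defaults.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    """Hypothetical container for the three limits listed above."""
    max_job_runs: int
    max_job_status_history_days: int
    max_time_series_purge_days: int


def exceeded_limits(requested: Limits, system: Limits) -> list[str]:
    """Return the names of requested values that exceed the system limits."""
    names = (
        "max_job_runs",
        "max_job_status_history_days",
        "max_time_series_purge_days",
    )
    return [n for n in names if getattr(requested, n) > getattr(system, n)]


# Sample values chosen for illustration only.
system_limits = Limits(100, 60, 180)
requested = Limits(50, 90, 180)
violations = exceeded_limits(requested, system_limits)
```

In this sketch, a non-empty `violations` list would correspond to a save that is rejected because an organization value exceeds the system limit.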

- LDAP Authentication

- To help synchronize Control Hub with the LDAP provider when using LDAP authentication, you can now configure Control Hub to automatically create and deactivate users to match users in LDAP groups.

When enabled, Control Hub does the following:

- Creates Control Hub users when necessary to match users in the LDAP groups.

- Deactivates but does not delete users from Control Hub when removed from all LDAP groups linked to Control Hub.

By default, this process is disabled.
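The two synchronization rules above amount to a set comparison. The sketch below illustrates that logic under stated assumptions: the function name and the data shapes are hypothetical, and it does not query a real LDAP server or reflect how Control Hub implements the process.

```python
def plan_user_sync(ldap_group_members, control_hub_users):
    """Compute which users to create and which to deactivate.

    ldap_group_members: dict mapping each linked LDAP group to a set of user IDs.
    control_hub_users: dict mapping user ID to True (active) or False (deactivated).
    """
    # A user counts as present if they belong to at least one linked group.
    in_any_group = set().union(*ldap_group_members.values())
    to_create = sorted(in_any_group - set(control_hub_users))
    # Users removed from all linked groups are deactivated, never deleted.
    to_deactivate = sorted(
        user
        for user, active in control_hub_users.items()
        if active and user not in in_any_group
    )
    return to_create, to_deactivate


groups = {"engineers": {"ana", "raj"}, "analysts": {"raj", "li"}}
users = {"ana": True, "tom": True, "old": False}
to_create, to_deactivate = plan_user_sync(groups, users)
```

Here `raj` and `li` would be created, `tom` would be deactivated, and the already deactivated `old` account is left untouched.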

- Updated Configuration Files

- The following configuration files include new properties for this release:

- $DPM_CONF/security-app.properties includes the following new properties to facilitate synchronization with LDAP:

- ldap.automaticResolutionEnabled - A flag to enable Control Hub to automatically add and deactivate users to match users in LDAP groups.

- ldap.resolutionFrequencyMillis - The number of milliseconds to wait between checks to add and deactivate users to match users in LDAP groups.

- $DPM_CONF/timeseries-app.properties includes the following new properties to support deletion of the metrics of inactive jobs older than a threshold that administrators configure for an organization:

- enable.metrics.history.purge - A flag to enable deletion of historical metrics.

- metrics.history.purge.init.delay.minutes - The number of minutes after the application starts until the first check for historical metrics to delete.

- metrics.history.purge.freq.minutes - The number of minutes between checks for historical metrics to delete.

- metrics.history.purge.batch.size - The maximum number of historical metrics to delete in one batch.

Do not change the values of these properties without guidance from StreamSets Support.


What's New in 3.15.x

StreamSets Control Hub version 3.15.x includes the following new features and enhancements:

- Pipeline Fragments

- When you reuse pipeline fragments in the same pipeline, you can now specify different values for the runtime parameters in each instance of the fragment. When adding a pipeline fragment to a pipeline, you specify a prefix for parameter names, and Pipeline Designer automatically adds that prefix to each runtime parameter in the pipeline fragment.
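The effect of the prefix can be sketched as follows. This is an illustration only: it assumes the prefix is simply prepended to each parameter name, and the parameter names and prefixes shown are hypothetical.

```python
def prefix_parameters(parameters, prefix):
    """Return a copy of the fragment's runtime parameters with prefixed names."""
    return {prefix + name: value for name, value in parameters.items()}


# Runtime parameters defined in one fragment (hypothetical names and defaults).
fragment_parameters = {"TABLE": "orders", "BATCH_SIZE": 1000}

# The same fragment added twice to one pipeline, each time with its own prefix.
pipeline_parameters = {
    **prefix_parameters(fragment_parameters, "src_"),
    **prefix_parameters(fragment_parameters, "dst_"),
}

# Each instance can now be given a different value.
pipeline_parameters["dst_TABLE"] = "orders_archive"
```

Because the prefixed names do not collide, changing `dst_TABLE` leaves `src_TABLE` at its original value.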

- Jobs

- To improve performance and scalability, this release introduces a process to manage job history. Now, Control Hub automatically deletes the history and metrics associated with a job on a predetermined basis.

By default, Control Hub:

- Retains the job history for the last 10 job runs. Administrators can increase the retention to at most 100 job runs.

- Retains the job history for 15 days from each retained job run. The history for each job run can contain at most 1,000 entries. Administrators can increase the retention to at most 60 days of job history.

- Retains job metrics only for jobs that have been active within the past 6 months.
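The default job history limits can be pictured with a small filter. The sketch below is one possible reading of the rules, not the Control Hub implementation: it assumes that the newest runs are kept, that entries older than the day limit are dropped, and that the most recent entries win when a run exceeds the entry limit. The function name and data shape are hypothetical.

```python
from datetime import datetime, timedelta


def retained_history(runs, now, max_runs=10, max_days=15, max_entries=1000):
    """Return the history that survives the retention rules.

    runs: dict mapping run number to a list of entry timestamps (datetime).
    The default arguments mirror the default limits described above.
    """
    newest_runs = sorted(runs, reverse=True)[:max_runs]
    cutoff = now - timedelta(days=max_days)
    kept = {}
    for run in newest_runs:
        recent = sorted(t for t in runs[run] if t >= cutoff)
        kept[run] = recent[-max_entries:]  # keep the most recent entries
    return kept


now = datetime(2020, 1, 31)
runs = {
    1: [now - timedelta(days=20), now - timedelta(days=1)],
    2: [now],
}
kept = retained_history(runs, now)
```

With the defaults, the 20-day-old entry of run 1 is dropped while both runs are kept; passing a larger `max_runs` or `max_days` models an administrator raising the limits.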

- Integration of Cloud-Native Transformer Application

- You can use Helm charts to run Transformer as a cloud-native application in a Kubernetes cluster. Control Hub can now generate a Helm script that inserts the Transformer authentication token and Control Hub URL into a Helm chart.

You can use Transformer running inside a Kubernetes cluster to launch Transformer pipeline jobs outside the Kubernetes cluster, such as those in a Databricks cluster, EMR cluster, or Azure HDInsight cluster. However, you can run a Transformer pipeline from the Kubernetes cluster without any additional Spark installation support from other vendors.

- Updated Configuration Files

- The following updated configuration files include new properties for this release:

- $DPM_CONF/jobrunner-app.properties now includes the following new properties to support purging of deleted jobs and to support automatic deletion of job history based on thresholds that administrators configure for an organization:

- job.status.history.purge.batch.size - The number of job status history entries purged in a batch.

- system.limit.job.status.history.records - The maximum number of records permitted in the job status history for each run.

Do not change the values of these properties without guidance from StreamSets Support.

- $DPM_CONF/timeseries-app.properties now includes the following new properties to support purging of metrics of deleted jobs and inactive jobs older than a threshold that administrators configure for an organization:

- enable.metrics.purge - A flag to enable purging of metrics.

- metrics.purge.init.delay.minutes - The number of minutes after the application starts until the first check for metrics to purge.

- metrics.purge.freq.minutes - The number of minutes between checks for metrics to purge.

- metrics.purge.batch.size - The maximum number of metrics to purge in one batch.

- metrics.purge.batch.pause.millis - The number of milliseconds to pause between each batch.

- metrics.fetch.batch.size - The maximum number of metrics to fetch for purging.

Do not change the values of these properties without guidance from StreamSets Support.


What's New in 3.14.x

StreamSets Control Hub version 3.14.x includes the following new features and enhancements:

- Data Protector


- Import and export policies - You can now import and export policies and their associated procedures. This enables you to share policies with different organizations, such as from a development organization to a production organization.

Import or export policies from the Protection Policies view.

- Category name assist in procedures - When you configure a procedure based on a category pattern, a list of matching category names displays when you begin typing the name. You can select the category name to use from the list of potential matches.

- Policy enactment change - Policies are no longer restricted to being used only upon read or only upon write. A policy can now be used in either case. As a result, the following changes have occurred:

- When previewing data or configuring a job, you can now select any policy for the read and for the write.

- You can now select any policy as the default read or write policy for an organization. You can even use the same policy as the default read policy and the default write policy.


- UI Improvements

- To improve usability, the Pipelines, Pipeline Fragments, Reports, and Jobs views change the positions of some fields.

- Data Collectors and Edge Data Collectors

- You can now configure resource thresholds for any registered Data Collector or Data Collector Edge. When starting, synchronizing, or balancing jobs, Control Hub ensures that a Data Collector or Data Collector Edge does not exceed its resource thresholds for CPU load, percent memory used, and number of pipelines running.
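The threshold check can be sketched as a comparison of an engine's current state against its three configured limits. The class names, field names, and sample values below are hypothetical, and treating "one more pipeline" as the unit of placement is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass
class EngineStatus:
    cpu_load_percent: float
    memory_used_percent: float
    pipelines_running: int


@dataclass
class Thresholds:
    max_cpu_load_percent: float
    max_memory_used_percent: float
    max_pipelines_running: int


def can_accept_pipeline(status, limits):
    """Return True if one more pipeline keeps the engine within its thresholds."""
    return (
        status.cpu_load_percent <= limits.max_cpu_load_percent
        and status.memory_used_percent <= limits.max_memory_used_percent
        and status.pipelines_running + 1 <= limits.max_pipelines_running
    )


limits = Thresholds(80.0, 75.0, 5)
idle = EngineStatus(20.0, 40.0, 1)
busy = EngineStatus(95.0, 40.0, 1)
eligible = [name for name, s in (("idle", idle), ("busy", busy)) if can_accept_pipeline(s, limits)]
```

Only the `idle` engine is eligible in this example, because the `busy` engine already exceeds its CPU load threshold.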

- Balancing Jobs

- From the Registered Data Collectors list, you can now balance jobs that are enabled for failover and running on selected Data Collectors to distribute pipeline load evenly. When balancing jobs, Control Hub redistributes jobs based on assigned labels, possibly distributing jobs to Data Collectors not selected.

- Organization Security

- When creating or editing a group, you can now click links to clear any assigned roles or select all available roles.

- Updated Configuration Files

- The following updated configuration files include new properties for this release:

- $DPM_CONF/jobrunner-app.properties now includes the following new properties to facilitate purging of deleted jobs:

- purge.job.immediate - A flag to purge jobs immediately upon delete rather than archiving them. The default value keeps existing functionality unchanged.

- enable.job.purge - A flag to enable purging of jobs.

- job.purge.age.days - The age of deleted jobs that the application purges.

- enable.active.job.purge - A flag to enable purging of deleted jobs shown as active.

- job.purge.init.delay.minutes - The number of minutes after the application starts until the first check for jobs to purge.

- job.purge.freq.minutes - The number of minutes between checks of jobs to purge.

- job.purge.batch.size - The maximum number of jobs to purge in one batch.

- job.purge.batch.pause.millis - The number of milliseconds to pause between each batch.

- enable.job.status.purge - A flag to enable purging of the job status.

- job.status.purge.exec.limit - Maximum number of job status records to retain.

- enable.job.status.history.purge - A flag to enable purging of the job status history.

Do not change the values of these properties without guidance from StreamSets Support.

- $DPM_CONF/messaging-app.properties now includes the following new properties to facilitate automatic deletion of excessive messages:

- event.delete.threshold - The maximum number of events in the queue before the application begins automatically deleting excessive messages.

- event.delete.chunk - The number of events deleted together as a chunk.

- event.delete.frequency.millis - The number of milliseconds between checks for excessive messages to delete.

- event.delete.initial.delay.millis - The number of milliseconds after the start of the messaging application before the first check for excessive messages to delete.

- event.delete.apps.to.monitor - A comma-separated list of applications monitored for automatic deletion of excessive messages.

- event.type.ids.to.monitor - A comma-separated list of event-type IDs monitored for automatic deletion of excessive messages.

In most cases, the default values for these properties should work.
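The interplay between the event.delete.threshold and event.delete.chunk properties can be pictured with the following sketch. It is an assumption-laden illustration, not the messaging application's code: it assumes the oldest events are deleted first and that deletion stops once the queue is back at the threshold. The function name and sample numbers are hypothetical.

```python
from collections import deque


def delete_excess(queue, threshold, chunk):
    """Delete the oldest events in chunks while the queue exceeds the threshold.

    Returns the number of events deleted.
    """
    deleted = 0
    while len(queue) > threshold:
        # Never delete below the threshold, even if a full chunk would.
        for _ in range(min(chunk, len(queue) - threshold)):
            queue.popleft()
            deleted += 1
    return deleted


events = deque(range(25))  # 25 queued events, oldest first
deleted = delete_excess(events, threshold=10, chunk=4)
```

In a real deployment such a check would run periodically, which is the role that the frequency and initial delay properties play.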

What's New in 3.13.x

StreamSets Control Hub version 3.13.x includes the following new features and enhancements:

- Data Protector


- Classification preview - You can now preview how StreamSets and custom classification rules classify data. You can use the default JSON test data or supply your own test data for the preview.

This is a Technology Preview feature that is meant for development and testing only.

- Pipeline preview with policy selection - When you preview pipeline data, you can now configure the read and write protection policies to use during the preview.

This is a Technology Preview feature that is meant for development and testing only.

- Cluster mode support - Data Protector now supports protecting data in cluster mode pipelines.

- Encrypt Data protection method - You can now use the Encrypt Data protection method to encrypt sensitive data.

- UI enhancements:

- The “Classification Rules” view has been renamed “Custom Classifications.”

- The Custom Classifications view and Protection Policies view are now available under a new Data Protector link in the navigation panel.

- Protection policies now list procedures horizontally instead of vertically.


- Subscriptions

- When configuring an email action for a job status change event, you can now use a parameter to have an email sent to the owner of the job.

- Organization Security

- This release includes the following improvements for managing users:

- The Add User dialog box now has links to clear any assigned roles or select all available roles.

- After you create a user account or reset the password of a user account, Control Hub now sends an email with a link to set a new password.

- Accessing Metrics with the REST API

- You can now use the Control Hub REST API to access the count of input records, output records, and error records, as well as the last reported metric time for a job run.

- New Configuration File

- This release includes a new configuration file, $DPM_CONF/dynamic_preview-app.properties, used to configure the Dynamic Preview (Data Protector) application.

- Updated Configuration Files

- The following updated configuration files include new properties for this release:

- $DPM_CONF/common-to-all-apps.properties now includes a new property, db.slow.query.interval.millis, which defines the number of milliseconds that a query must run before Control Hub logs a warning. In most cases, the default for this property should work.

- $DPM_CONF/jobrunner-app.properties now includes a new property, failover.initial.delay.millis, which defines the number of milliseconds to wait before Control Hub starts the failover thread. In most cases, the default for this property should work.

- $DPM_CONF/security-app.properties now includes the property reset.token.expiry.in.days, which defines the number of days until the link to set a password expires. This property replaces the same property in the common-to-all-apps.properties file. In most cases, the default value for this property should work.


What's New in 3.12.x

StreamSets Control Hub version 3.12.x includes the following new features and enhancements:

- Uploading Offsets with the REST API

- You can now upload a valid offset with the Control Hub REST API, which uploads the offset as JSON data.

- Updated Configuration File

- The $DPM_CONF/common-to-all-apps.properties configuration file now includes a new property, app.threads, which defines the number of threads each application uses. In most cases, the default for this property should work.

What's New in 3.11.x

StreamSets Control Hub version 3.11.x includes the following new features and enhancements:

- Transformer Integration

- This release integrates StreamSets Transformer in Control Hub.

- Pipeline Design

- Pipeline Designer includes the following enhancements:

- Delete a draft pipeline or fragment in Pipeline Designer - While editing a draft version of a pipeline or fragment, you can now delete that draft version to revert to the previous published version of the pipeline or fragment. Previously, you could not delete a draft pipeline or fragment that was open in Pipeline Designer. You had to view the pipeline history, and then select the draft version to delete.

- View the input and output schema for each stage - After running preview for a pipeline, you can now view the input and output schema for each stage on the Schema tab in the pipeline properties panel. The schema includes each field name and data type.

Use the Schema tab when you configure pipeline stages that require field names. For example, let’s say you are configuring a Field Type Converter processor to convert the data type of a field by name. You can run preview, copy the field name from the Schema tab, and then paste the field name into the processor configuration.

- Bulk update pipelines to use a different fragment version - When viewing a published pipeline fragment in Pipeline Designer, you can now update multiple pipelines at once to use a different version of that fragment. For example, if you edit a fragment and then publish a new version of the fragment, you can easily update all pipelines using that fragment to use the latest version.

- Import a new version of a published pipeline in Pipeline Designer - While viewing a published pipeline in Pipeline Designer, you can import a new version of the pipeline. You can import any pipeline exported from Data Collector for use in Control Hub or any pipeline exported from Control Hub as a new version of the current pipeline.

- User-defined sample pipelines - You can now create a user-defined sample pipeline by assigning the templates pipeline label to a published pipeline. Users with read permission on the published pipeline can select the pipeline as a user-defined sample when developing a new pipeline.

- Test run of a draft pipeline - You can now perform a test run of a draft pipeline in Pipeline Designer. Perform a test run of a draft pipeline to quickly test the pipeline logic. You cannot perform a test run of a published pipeline. To run a published pipeline, you must first add the pipeline to a job and then start the job.

- Shortcut keys to undo and redo actions - You can now use the following shortcut keys to easily undo and redo actions in Pipeline Designer:

- Press Command+Z to undo an action.

- Press Command+Shift+Z to redo an action.

- Jobs

- Jobs include the following enhancements:

- Monitoring errors - When you monitor an active Data Collector job with a pipeline stage that encounters errors, you can now view details about each error record on the Errors tab in the Monitor panel.

- Export and import job templates - When you export and import a job template, the template is now imported as a job template. You can then create job instances from that template in the new organization. You cannot export and import a job instance. Previously, when you exported and imported a job template or a job instance, the imported job template or instance functioned as a regular job in the new organization.

- Subscriptions

- You can now configure a subscription action for a changed job status color. For example, you might create a subscription that sends an email when a job status changes from active green to active red.

- Roles

- All Data Collector roles have been renamed to Engine roles and now enable performing tasks in registered Data Collectors and registered Transformers.

For example, the Data Collector Administrator role has been renamed to the Engine Administrator role. The Engine Administrator role now allows users to perform all tasks in registered Data Collectors and registered Transformers.

- Provisioned Data Collectors

- Provisioned Data Collectors include the following enhancements:

- Upload the deployment YAML specification file - When you create a deployment, you can now upload the deployment YAML specification file instead of copying the contents of the file in the YAML Specification property.

- View YAML specification file for active deployments - You can now view the contents of the YAML specification file when you view the details of an active deployment.

- Configurable Kerberos principal user name - When you define a deployment YAML specification file to provision Data Collector containers enabled for Kerberos authentication, you can now optionally define the Kerberos principal user name to use for the deployment. If you do not define a Kerberos user name, the Provisioning Agent uses sdc as the user name.

- Updated Configuration Files

- The following updated configuration files include new properties for this release:

- common-to-all-apps.properties

The $DPM_CONF/common-to-all-apps.properties file includes a new property that defines a timeout for all queries to the Control Hub databases. It also includes a new property that defines the number of objects to check for permissions in a single query when a user views objects of that type. In most cases, the defaults for these properties should work.

- notification-app.properties

The $DPM_CONF/notification-app.properties file includes a new should.use.check.frequency.millis property that is set to false so that the Notification application calculates the value of the check.frequency.millis property. Do not change the default value of false.


What's New in 3.10.x

StreamSets Control Hub version 3.10.x includes the following new features and enhancements:

- Installation Requirements

- Control Hub includes the following enhancements to the installation requirements:

- Relational database - PostgreSQL 9.6 is now supported.

- Time series database - InfluxDB 1.7 is now supported.

- Pipeline Design

- Pipeline Designer includes the following enhancements:

- Preview time zone - You can now select the time zone to use for the preview of date, datetime, and time data. Previously, preview always displayed data using the browser time zone.

- Compare pipeline versions - When you compare pipeline versions, you can now click the name of either pipeline version to open that version in the pipeline canvas.

Previously, you had to return to the Navigation panel and then select the pipeline version from the Pipeline Repository to open one of the versions in the pipeline canvas.

- Jobs

- You can now upload an initial offset file for a job. Upload an initial offset file when you first run a pipeline in Data Collector, publish the pipeline to Control Hub, and then want to continue running the pipeline from the Control Hub job using the last-saved offset maintained by Data Collector.

- SAML Authentication

- When SAML authentication is enabled, users with the new Control Hub Authentication role can complete the following tasks that require users to be authenticated by Control Hub:

- Use the Data Collector command line interface.

- Log into a Data Collector running in disconnected mode.

- Use the Control Hub REST API.

Previously, only users with the Organization Administrator role could complete these tasks when SAML authentication was enabled.

- Provisioned Data Collectors

- The Control Agent Docker image version 3.10.0 now requires that each YAML specification file that defines a deployment use the Kubernetes API version apps/v1. Previously, the Control Agent Docker image required that each YAML specification file use the API version extensions/v1beta1 for a deployment. Kubernetes has deprecated the extensions/v1beta1 version for deployments.

- Updated Configuration File

- The $DPM_CONF/security-app.properties file includes a new property that defines the welcome email sent to new users when SAML authentication is enabled for the organization.
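For the Kubernetes API version change noted under Provisioned Data Collectors in this release, a quick way to find specification files that still need attention is to scan them for the deprecated version string. The sketch below is a plain-text check written for illustration: the function name and the sample specification (including the deployment name) are hypothetical, and it does not validate the rest of the file.

```python
import re

DEPRECATED = "extensions/v1beta1"
CURRENT = "apps/v1"

# Matches an apiVersion line that uses the deprecated value, quoted or not.
_PATTERN = re.compile(
    r"^\s*apiVersion:\s*['\"]?" + re.escape(DEPRECATED) + r"['\"]?\s*(#.*)?$"
)


def deprecated_api_lines(spec_text):
    """Return the 1-based line numbers that declare the deprecated API version."""
    return [
        number
        for number, line in enumerate(spec_text.splitlines(), start=1)
        if _PATTERN.match(line)
    ]


sample_spec = """apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: datacollector-deployment
"""
flagged = deprecated_api_lines(sample_spec)
updated_spec = sample_spec.replace(DEPRECATED, CURRENT)
```

Changing the version string alone may not be sufficient, because an apps/v1 Deployment also requires a spec.selector field. Review each flagged file against the Kubernetes documentation.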

What's New in 3.9.0

- Sensitive Data in Configuration Files

- You can now protect sensitive data in Control Hub configuration files by storing the data in an external location and then using the exec function to call a script or executable that retrieves the data. For example, you can develop a script that decrypts an encrypted file containing a password. Or you can develop a script that calls an external REST API to retrieve a password from a remote vault system.

- Updated Configuration Files

- The following updated configuration files include new properties for this release:

- dpm.properties

The $DPM_CONF/dpm.properties file includes a new ui.pendo.enabled property that determines whether Control Hub enables tracking tools that send UI usage data to StreamSets and that receive product announcements and onboarding guides from StreamSets.

- jobrunner-app.properties

The $DPM_CONF/jobrunner-app.properties file includes a new should.use.check.frequency.millis property that is set to false so that the Job Runner application calculates the value of the check.frequency.millis property. Do not change the default value of false.


What's New in 3.8.0

StreamSets Control Hub version 3.8.0 includes the following new features and enhancements:

- Provisioned Data Collectors

- You can now create a Provisioning Agent that provisions Data Collector containers enabled for Kerberos authentication.

- Jobs

- You can now upgrade active jobs to use the latest pipeline version. When you upgrade an active job, Control Hub stops the job, updates the job to use the latest pipeline version, and then restarts the job.

- Pipeline Design

- When you use the Control Hub Pipeline Designer to design pipelines, you can now call pipeline parameters for properties that display as checkboxes and drop-down menus. The parameters must evaluate to a valid option for the property.

- Permissions

- You can now share and grant permissions on multiple objects at the same time.

- Subscriptions

- When you create a subscription, you now configure the subscription in a single dialog box instead of clicking through multiple pages.

- LDAP Authentication

- When Control Hub uses LDAP authentication, organization administrators that have configured a disconnected mode password for their user account can now log into registered Data Collectors that are running in disconnected mode.

- Updated Configuration File

- The $DPM_CONF/jobrunner-app.properties file includes the following new properties:

- always.migrate.offsets - Determines whether Control Hub always migrates job offsets to another available Data Collector assigned all labels specified for the job when you stop and restart a job. Default is false.

- failover.check.freq.millis - The time in milliseconds between Control Hub checks for pipelines that should be failed over to another Data Collector. Default is 60,000 milliseconds.
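To check how these two properties are set, a script can read the file as simple key=value pairs. The sketch below is a deliberately simplified reader written for illustration: it ignores parts of the Java properties format such as line continuations and the colon separator, and it parses an inline sample that contains the documented defaults rather than a real file.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


# Sample content using the default values stated above.
sample = """
# Excerpt in the style of jobrunner-app.properties
always.migrate.offsets=false
failover.check.freq.millis=60000
"""

props = parse_properties(sample)
always_migrate = props.get("always.migrate.offsets", "false").lower() == "true"
check_freq_millis = int(props.get("failover.check.freq.millis", "60000"))
```

The fallbacks passed to `get` mirror the documented defaults, so a file that omits either property yields the same result.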

What's New in 3.7.1

StreamSets Control Hub version 3.7.1 includes the following new features and enhancements:

- Upgrade

- When you update schemas in the relational database, you now run the same database initialization script for all upgrades. Previously, you had to run different commands based on the version that you were upgrading from.

- Preview in Pipeline Designer

- Pipeline Designer can now display preview data in table view.

- Subscriptions

- Subscriptions include the following enhancements:

- Pipeline status change event - You can now configure a subscription action for a changed pipeline status. For example, you might create a subscription that sends an email when a pipeline status changes to RUN_ERROR.

- Expression completion to filter events - You can now use expression completion to determine the functions and parameters that you can use for each subscription filter.

- Scheduler

- The Control Hub scheduler can now stop a job at a specified frequency. For example, you might want to run a streaming job every day of the week except for Sunday. You create one scheduled task that starts the job every Monday at 12:00 am. Then, you create another scheduled task that stops the same job every Sunday at 12:00 am.
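The combined effect of the two scheduled tasks in this example can be expressed as a small predicate. The sketch below only models the resulting running window; the function name is hypothetical and the code does not interact with the Control Hub scheduler.

```python
from datetime import datetime


def job_should_be_running(moment):
    """Model the example: start every Monday at 12:00 am, stop every Sunday at 12:00 am.

    The job therefore runs on every day except Sunday.
    """
    return moment.weekday() != 6  # Monday is 0, Sunday is 6


saturday_night = datetime(2024, 4, 6, 23, 59)
sunday_noon = datetime(2024, 4, 7, 12, 0)
monday_midnight = datetime(2024, 4, 8, 0, 0)
states = [job_should_be_running(m) for m in (saturday_night, sunday_noon, monday_midnight)]
```

The job is running late on Saturday, stopped throughout Sunday, and running again from the first moment of Monday.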

- SAML Authentication

- When you map a Control Hub user account to a SAML IdP user account, the SAML User Name property now defaults to the email address associated with the Control Hub user account. Previously, the default value was the user ID associated with the Control Hub user account.

What's New in 3.6.0

StreamSets Control Hub version 3.6.0 includes the following new features and enhancements:

- Installation

- The Control Hub installation process includes the following enhancements:

- MariaDB support - Control Hub now supports MariaDB in addition to MySQL and PostgreSQL for the relational database that stores metadata written by Control Hub applications.

- Setting up Control Hub - During the Control Hub installation process, when you run the Control Hub setup script to configure Control Hub properties, you no longer need to enter both a Control Hub Base URL and a Load Balancer URL. The duplicate Load Balancer URL property has been removed. If you are installing multiple instances of Control Hub for high availability, you simply enter the load balancer URL for the Control Hub Base URL.

- Control Hub license temporarily activated - When you generate a unique system ID after installing Control Hub, your license is now temporarily activated for seven days. This way, you can start and log in to Control Hub while you wait for your permanent activation key from StreamSets.

- Data Protector

- This release supports the latest version of Data Protector, Data Protector 1.4.0.

- Preview in Pipeline Designer

- You can now preview multiple stages in Pipeline Designer. When you preview multiple stages, you select the first stage and the last stage in the group. The Preview panel then displays the output data of the first stage in the group and the input data of the last stage in the group.

- Job Templates

- When you create a job for a pipeline that uses runtime parameters, you can now enable the job to work as a job template. A job template lets you run multiple job instances with different runtime parameter values from a single job definition.
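The relationship between a job template and its job instances can be sketched as one definition combined with several sets of parameter values. The class, the instance naming scheme, and the parameter names below are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class JobTemplate:
    name: str
    pipeline: str
    default_parameters: dict


def create_instances(template, parameter_sets):
    """Return one job instance description per set of runtime parameter values."""
    return [
        {
            "name": f"{template.name} - {index}",
            "pipeline": template.pipeline,
            # Values supplied for the instance override the template defaults.
            "parameters": {**template.default_parameters, **overrides},
        }
        for index, overrides in enumerate(parameter_sets, start=1)
    ]


template = JobTemplate("Load Orders", "orders-pipeline", {"REGION": "us", "BATCH_SIZE": 1000})
instances = create_instances(template, [{"REGION": "eu"}, {"REGION": "apac", "BATCH_SIZE": 500}])
```

Both instances run the same pipeline; only the runtime parameter values differ.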

- Subscribe to Unresponsive Data Collector or Data Collector Edge Events

- You can now configure a subscription action for a Data Collector or Data Collector Edge not responding event. For example, you might create a subscription that sends an alert to a Slack channel when a registered Data Collector stops responding.

- Updated Configuration File

- The $DPM_CONF/common-to-all-apps.properties file no longer includes the duplicate http.load.balancer.url property. If you installed multiple instances of Control Hub for high availability, you simply enter the load balancer URL for the dpm.base.url property.

What's New in 3.5.0

StreamSets Control Hub version 3.5.0 includes the following new features and enhancements:

- StreamSets Data Protector

- You can now use StreamSets Data Protector to perform global in-stream discovery and protection of data in motion with Control Hub.

- Pipeline Designer

- Pipeline Designer includes the following enhancements:

- Expression completion - Pipeline Designer now completes expressions in stage and pipeline properties to provide a list of data types, runtime parameters, fields, and functions that you can use.

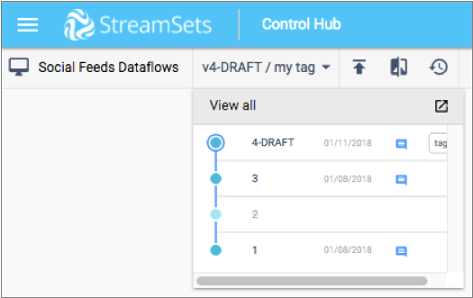

- Manage pipeline and fragment versions - When configuring a pipeline or pipeline fragment in Pipeline Designer, you can now view the following visualization of the pipeline or fragment version history:

When you expand the version history, you can manage the pipeline or fragment versions including comparing versions, creating tags for versions, and deleting versions.

- Pipeline fragment expansion in pipelines - You can now expand and collapse individual pipeline fragments when used in a pipeline. Previously, expanding a fragment meant that all fragments in the pipeline were expanded.

When a fragment is expanded, the pipeline enters read-only mode allowing no changes. Collapse all fragments to make changes to the pipeline.

- Preview and validate edge pipelines - You can now use Pipeline Designer to preview and validate edge pipelines.

- Shortcut menu for stages - When you select a stage in the canvas, a shortcut menu now displays with a set of options:

- For a pipeline fragment stage, you can copy, expand or delete the fragment.

- For all other stages, you can copy or delete the stage, or create a pipeline fragment using the selected stage or set of stages.

- Failover Retries for Jobs

- When a job is enabled for failover, Control Hub by default retries the pipeline failover an infinite number of times. If you want the pipeline failover to stop after a given number of retries, you can now define the maximum number of retries to perform. Control Hub maintains the failover retry count for each available Data Collector.
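The per-Data Collector retry count can be pictured with a small tracker. This is a sketch under stated assumptions, not Control Hub's implementation: the class name is hypothetical, and using -1 to mean an unlimited number of retries is a convention chosen for this example.

```python
from collections import defaultdict


class FailoverTracker:
    """Track failover attempts separately for each Data Collector."""

    def __init__(self, max_retries=-1):
        # In this sketch, a negative value means retry indefinitely.
        self.max_retries = max_retries
        self.attempts = defaultdict(int)

    def may_retry_on(self, data_collector_id):
        """Return True if another failover to this Data Collector is allowed."""
        if self.max_retries < 0:
            return True
        return self.attempts[data_collector_id] < self.max_retries

    def record_attempt(self, data_collector_id):
        self.attempts[data_collector_id] += 1


tracker = FailoverTracker(max_retries=2)
tracker.record_attempt("sdc-1")
tracker.record_attempt("sdc-1")
allowed = {dc: tracker.may_retry_on(dc) for dc in ("sdc-1", "sdc-2")}
```

Because the count is kept per Data Collector, exhausting the retries on `sdc-1` does not prevent a failover to `sdc-2`.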

- Starting Jobs with the REST API

- You can now define runtime parameter values for a job when you start the job using the Control Hub REST API.

- Data Collectors

- You can now use an automation tool such as Ansible, Chef, or Puppet to automate the registering and unregistering of Data Collectors using the following commands:

streamsets sch register

streamsets sch unregister

- Enabling HTTPS for Control Hub

- If working with a Control Hub on-premises installation enabled for HTTPS in a test or development environment, you can now configure Data Collector Edge (SDC Edge) to skip verifying the Control Hub trusted certificates. StreamSets highly recommends that you configure SDC Edge to verify trusted certificates in a production environment.

- New Configuration Files

- This release includes the following new configuration files located in the $DPM_CONF directory:

- policy-app.properties - Used to configure the Policy (Data Protector) application.

- sdp_classification-app.properties - Used to configure the Classification (Data Protector) application.

- Updated Configuration File

- The $DPM_CONF/dpm.properties file includes a new ui.doc.help.url property that determines whether Control Hub uses the help project installed with Control Hub or uses the help project hosted on the StreamSets website. Hosted help contains the latest available documentation and requires an internet connection.

What's New in 3.3.0

StreamSets Control Hub version 3.3.0 includes the following new features and enhancements:

- Pipelines and Pipeline Fragments

-

- Data preview enhancements:

- Data preview support for pipeline fragments - You can now use data preview with pipeline fragments. When using Data Collector 3.4.0 for the authoring Data Collector, you can also use a test origin to provide data for the preview. This can be especially useful when the fragment does not contain an origin.

- Edit data and stage properties - You can now edit preview data and stage properties, then run the preview with your changes. You can also revert data changes and refresh the preview to view additional data.

- Select multiple stages - When you design pipelines and pipeline fragments, you can now select multiple stages in the canvas by selecting the Shift key and clicking each stage. You can then move or delete the selected stages.

- Export enhancement - When you export a single pipeline or a single fragment, the pipeline or fragment is now saved in a zip file of the same name, as follows: <pipeline or fragment name>.zip. Exporting multiple pipelines or fragments still results in the following file name: <pipelines|fragments>.zip.

- View where fragments are used - When you view the details of a fragment, Pipeline Designer now displays the list of pipelines that use the fragment.

- Jobs

-

- Runtime parameters enhancements - When you edit a job, you can now use the Get Default Parameters option to retrieve all parameters and their default values as defined in the pipeline. You can also use simple edit mode, in addition to bulk edit mode, to define parameter values.

- Pipeline failover enhancement - When determining which available Data Collector restarts a failed pipeline, Control Hub now prioritizes Data Collectors that have not previously failed the pipeline.
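The prioritization described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not Control Hub's actual selection logic: the function name and Data Collector IDs are hypothetical, and any other criteria that a real deployment might apply are ignored.

```python
def pick_failover_collector(available, previously_failed):
    """Return the Data Collector to try next, or None if none is available.

    Data Collectors that have not previously failed the pipeline come first;
    ties keep the order of `available`.
    """
    if not available:
        return None
    # sorted() is stable, so False (never failed) sorts ahead of True.
    ranked = sorted(available, key=lambda sdc: sdc in previously_failed)
    return ranked[0]


available = ["sdc-1", "sdc-2", "sdc-3"]  # hypothetical Data Collector IDs
previously_failed = {"sdc-1"}            # sdc-1 already failed this pipeline
print(pick_failover_collector(available, previously_failed))
```

In this model a Data Collector that previously failed the pipeline is still eligible. It is only chosen after every Data Collector without a failure has been considered.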

- Data Collectors

-

- Monitor Data Collector performance - When you view registered Data Collectors version 3.4.0 from the Execute view, you can now monitor the CPU load and memory usage of each Data Collector.

- Edge Data Collectors (SDC Edge)

-

- Monitor SDC Edge performance - When you view registered Edge Data Collectors version 3.4.0 from the Execute view, you can now monitor the CPU load and memory usage of each SDC Edge.

- Data Delivery Reports

-

- Destination statistics - Data delivery reports for jobs and topologies now contain statistics for destinations.

- Documentation

-

- Documentation enhancement - The online help has a new look and feel. All of the previous documentation remains exactly where you expect it, but it is now easier to view and navigate on smaller devices like your tablet or mobile phone.

What's New in 3.2.1

StreamSets Control Hub version 3.2.1 fixes the following known issues:

- Viewing pipeline details from the Topology view causes an error to occur.

- Time series charts for jobs cannot be viewed from the Topology view even though time series analysis is enabled.

- When a Kubernetes pod is restarted, the Provisioning Agent fails to register the Data Collector containers with Control Hub.

What's New in 3.2.0

StreamSets Control Hub version 3.2.0 includes the following new features:

- Activate the Control Hub License

-

Each Control Hub system now requires an active license. During the installation process, you use the Control Hub security command line program to generate a unique system ID and then request an activation key for that system ID from the StreamSets support team. After you receive the activation key, you use the security command line program to activate the license.

Each activation key is generated for a specific Control Hub system ID. If you install multiple Control Hub instances for a highly available system, you only need to activate the license once.

- Pipeline Fragments

- Control Hub now includes pipeline fragments. A pipeline fragment is a stage or set of connected stages that you can reuse in Data Collector or SDC Edge pipelines. Use pipeline fragments to easily add the same processing logic to multiple pipelines and to ensure that the logic is used as designed.

- Scheduler

-

Control Hub now includes a scheduler that manages long-running scheduled tasks. A scheduled task periodically triggers the execution of a job or a data delivery report at the specified frequency. For example, a scheduled task can start a job or generate a data delivery report on a weekly or monthly basis.

Before you can schedule jobs and data delivery reports, the Scheduler Operator role must be assigned to your user account.

- Data Delivery Reports

-

Control Hub now includes data delivery reports that show how much data was processed by a job or topology over a given period of time. You can create periodic reports with the scheduler, or create an on-demand report.

Before you can manage data delivery reports, the Reporting Operator role must be assigned to your user account.

- Jobs

-

- Edit a pipeline version directly from a job - When viewing the details of a job or monitoring a job, you can now edit the latest version of the pipeline directly from the job. Previously, you had to locate the pipeline in the Pipeline Repository view before you could edit the pipeline.

- Enable time series analysis - You can now enable time series analysis for a job. When enabled, you can view historical time series data when you monitor the job or a topology that includes the job.

When time series analysis is disabled, you can still view the total record count and throughput for a job or topology, but you cannot view the data over a period of time. For example, you can’t view the record count for the last five minutes or for the last hour.

By default, all existing jobs have time series analysis enabled. All new jobs have time series analysis disabled. You might want to enable time series analysis for new jobs for debugging purposes or to analyze dataflow performance.

- Pipeline force stop timeout - In some situations when you stop a job, a remote pipeline instance can remain in a Stopping state for a long time. When you configure a job, you can now configure the number of milliseconds to wait before forcing remote pipeline instances to stop. The default time to force a pipeline to stop is 2 minutes (120,000 milliseconds).

- View logs - While monitoring an active job, the top toolbar now includes a View Logs icon that displays the logs for any remote pipeline instance run from the job.

- Subscriptions

-

- Email action - You can now create a subscription that listens for Control Hub events and then sends an email when those events occur. For example, you might send an email each time a job status changes.

- Pipeline committed event - You can configure an action for a pipeline committed event. For example, you might send a message when a pipeline is committed with the name of the user who committed it.

- Filter the events to subscribe to - You can now use the StreamSets expression language to create an expression that filters the events that you want to subscribe to. You can include subscription parameters and StreamSets string functions in the expression.

For example, you might enter the following expression for a Job Status Change event so that the subscription is triggered only when the specified job ID encounters a status change:

${JOB_ID == '99efe399-7fb5-4383-9e27-e4c56b53db31:MyCompany'}

If you do not filter the events, then the subscription is triggered each time an event occurs for all objects that you have at least read permission on.

- Permissions - When permission enforcement is enabled for your organization, you can now share and grant permissions on subscriptions.
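The effect of an event filter on a subscription, as described for the JOB_ID expression above, can be mimicked with a short Python sketch. It is only an analogy for how such a filter narrows the events that trigger a subscription. Representing events as dictionaries and the helper name are assumptions made for the example, and the sketch does not evaluate the StreamSets expression language.

```python
def make_job_filter(target_job_id=None):
    """Return a predicate deciding whether an event triggers the subscription.

    With no target job ID, every event passes, mirroring an unfiltered
    subscription.
    """
    def matches(event):
        if target_job_id is None:
            return True
        return event.get("JOB_ID") == target_job_id
    return matches


events = [  # hypothetical Job Status Change events
    {"JOB_ID": "99efe399-7fb5-4383-9e27-e4c56b53db31:MyCompany"},
    {"JOB_ID": "another-job:MyCompany"},
]

only_one_job = make_job_filter("99efe399-7fb5-4383-9e27-e4c56b53db31:MyCompany")
unfiltered = make_job_filter()

print(sum(only_one_job(e) for e in events), sum(unfiltered(e) for e in events))
```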

- Provisioned Data Collectors

-

When you define a deployment YAML specification file for provisioned Data Collectors, you can now optionally associate a Kubernetes Horizontal Pod Autoscaler, service, or Ingress with the deployment.

Define a deployment and Horizontal Pod Autoscaler in the specification file for a deployment of one or more execution Data Collectors that must automatically scale during times of peak performance. The Kubernetes Horizontal Pod Autoscaler automatically scales the deployment based on CPU utilization.

Define a deployment and service in the specification file for a deployment of a single development Data Collector that must be exposed outside the cluster using a Kubernetes service. Optionally associate an Ingress with the service to provide load balancing, SSL termination, and virtual hosting to the service in the Kubernetes cluster.

- New Configuration Files

-

This release includes the following new configuration files located in the $DPM_CONF directory:

- reporting-app.properties - Used to configure the Reporting application.

- scheduler-app.properties - Used to configure the Scheduler application.

What's New in 3.1.1

- View logs for an active job

- When monitoring an active job, you can now view the logs for a remote pipeline instance from the Data Collectors tab.

What's New in 3.1.0

StreamSets Control Hub version 3.1.0 includes the following new features:

- System Data Collector

-

You can now configure the system Data Collector connection properties when you run the Control Hub setup script. Previously, you had to modify the $DPM_CONF/common-to-all-apps.properties file to configure the system Data Collector properties.

- Pipelines

-

Pipelines include the following enhancements:

- Duplicate pipelines - You can now select a pipeline in the Pipeline Repository view and then duplicate the pipeline. A duplicate is an exact copy of the original pipeline.

- Commit message when publishing pipelines - You can now enter commit messages when you publish pipelines from Pipeline Designer. Previously, you could only enter commit messages when you published pipelines from a registered Data Collector.

- Export and Import

-

You can now use Control Hub to export and import the following objects:

- Jobs and topologies - You can now export and import jobs and topologies to migrate the objects from one organization to another. You can export a single job or topology or you can export a set of jobs and topologies.

When you export and import jobs and topologies, you also export and import dependent objects. For jobs, you also export and import the pipelines included in the jobs. For topologies, you also export and import the jobs and pipelines included in the topologies.

- Sets of pipelines - You can now select multiple pipelines in the Pipeline Repository view and export the pipelines as a set to a ZIP file. You can also now import pipelines from a ZIP file containing multiple pipeline files.

- Alerts

-

The Notifications view has been renamed to the Alerts view.

- Subscriptions

-

You can now create a subscription that listens for Control Hub events and then completes an action when those events occur. For example, you might create a subscription that sends a message to a Slack channel each time a job status changes.