Kafka Consumer

The Kafka Consumer origin reads data from a single topic in an Apache Kafka cluster. To use multiple threads to read from multiple topics, use the Kafka Multitopic Consumer.

When you configure a Kafka Consumer, you configure the consumer group name, topic, and ZooKeeper connection information.

When using Kafka version 0.8.2 or later to consume messages in the Avro format, you can configure the Kafka Consumer to work with the Confluent Schema Registry. The Confluent Schema Registry is a distributed storage layer for Avro schemas that uses Kafka as its underlying storage mechanism.
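When the Avro schema ID is embedded in each message, messages written by Confluent's Avro serializer follow the Confluent wire format: one magic byte (0), a 4-byte big-endian schema ID, and then the Avro binary payload. The minimal Python sketch below shows only how that schema ID can be read from a message before a registry lookup. It is not the origin's implementation, and the name split_confluent_message is hypothetical.

```python
import struct

# Confluent wire format framing:
#   byte 0     magic byte, always 0
#   bytes 1-4  schema ID, big-endian 4-byte integer
#   bytes 5..  Avro binary payload
HEADER = struct.Struct(">BI")  # 1 + 4 = 5 bytes; ">" means no padding


def split_confluent_message(message: bytes):
    """Return (schema_id, avro_payload) for a Confluent-framed message."""
    if len(message) < HEADER.size:
        raise ValueError("message is too short to carry a schema ID")
    magic, schema_id = HEADER.unpack_from(message)
    if magic != 0:
        raise ValueError(f"unexpected magic byte: {magic}")
    return schema_id, message[HEADER.size:]


if __name__ == "__main__":
    # Schema ID 42 followed by a placeholder payload.
    print(split_confluent_message(b"\x00\x00\x00\x00\x2a" + b"payload"))
```

The schema ID returned here is what a registry lookup by embedded ID would use; decoding the Avro payload itself is out of scope for this sketch.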

You can add custom Kafka configuration properties as needed. When using Kafka version 0.9.0.0 or later, you can also configure the origin to use Kafka security features.

Kafka Consumer includes record header attributes that enable you to use information about the record in pipeline processing.

Initial and Subsequent Offsets

When you start a pipeline for the first time, the Kafka Consumer becomes a new consumer group for the topic.

By default, the origin reads only incoming data, processing data from all partitions and ignoring any existing data in the topic. After the origin passes data to destinations, it saves the offset with Kafka or ZooKeeper. When you stop and restart the pipeline, processing continues based on the offset.

For Kafka versions before 0.9.0.0, the offset is stored with Kafka or ZooKeeper based on the offsets.storage Kafka property. For Kafka version 0.9.0.0 or later, the offset is stored with Kafka.

Processing Available Data

You can configure the Kafka Consumer origin to read all unread data in a topic. By default, the Kafka Consumer origin reads only incoming data.

- On the Kafka tab, click the Add icon to add a new Kafka configuration property.

You can use simple or bulk edit mode to add configuration properties.

- For the property name, enter auto.offset.reset.

- Define the value for the auto.offset.reset property:

- When using Kafka versions before 0.9.0.0, set the property to smallest.

- When using Kafka version 0.9.0.0 or later, set the property to earliest.

For more information about adding custom Kafka configuration properties, see Additional Kafka Properties.
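The version rule for auto.offset.reset can be summarized in a small Python sketch. The helper names are hypothetical, and the sketch assumes the Kafka version is given as a dotted string such as 0.9.0.0. It only chooses the property value; it does not connect to Kafka.

```python
def parse_version(version: str) -> tuple:
    """Turn '0.9' or '0.9.0.0' into a 4-part tuple that compares correctly."""
    parts = [int(part) for part in version.split(".")]
    parts += [0] * (4 - len(parts))  # pad so '0.9' compares equal to '0.9.0.0'
    return tuple(parts)


def offset_reset_property(kafka_version: str) -> dict:
    """Custom property that makes the origin read all unread data in the topic."""
    if parse_version(kafka_version) < (0, 9, 0, 0):
        return {"auto.offset.reset": "smallest"}  # before Kafka 0.9.0.0
    return {"auto.offset.reset": "earliest"}  # Kafka 0.9.0.0 or later


if __name__ == "__main__":
    for version in ("0.8.2", "0.9.0.0", "0.10.1.0"):
        print(version, offset_reset_property(version))
```

Padding the version to four parts avoids treating a short string such as 0.9 as older than 0.9.0.0.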

Additional Kafka Properties

You can add custom Kafka configuration properties to the Kafka Consumer.

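When you add the Kafka configuration property, enter the exact property name and the value. The Kafka Consumer does not validate the property names or values.

The Kafka Consumer manages the following properties through its own configuration, so do not add them as custom properties: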

- auto.commit.enable

- group.id

- zookeeper.connect

Record Header Attributes

The Kafka Consumer origin creates record header attributes that include information about where the record originated in Kafka. When the origin processes Avro data, it includes the Avro schema in an avroSchema record header attribute.

You can use the record:attribute or record:attributeOrDefault functions to access the information in the attributes. For more information about working with record header attributes, see Working with Header Attributes.

The Kafka Consumer origin creates the following record header attributes:

- avroSchema - When processing Avro data, provides the Avro schema.

- offset - The offset where the record originated.

- partition - The partition where the record originated.

- topic - The topic where the record originated.

Enabling Security

When using Kafka version 0.9.0.0 or later, you can configure the Kafka Consumer origin to connect securely through SSL/TLS, Kerberos, or both.

Earlier versions of Kafka do not support security.

Enabling SSL/TLS

Perform the following steps to enable the Kafka Consumer origin to use SSL/TLS to connect to Kafka version 0.9.0.0 or later. You can use the same steps to configure a Kafka Producer.

- To use SSL/TLS to connect, first make sure Kafka is configured for SSL/TLS as described in the Kafka documentation.

- On the General tab of the stage, set the Stage Library property to Apache Kafka 0.9.0.0 or a later version.

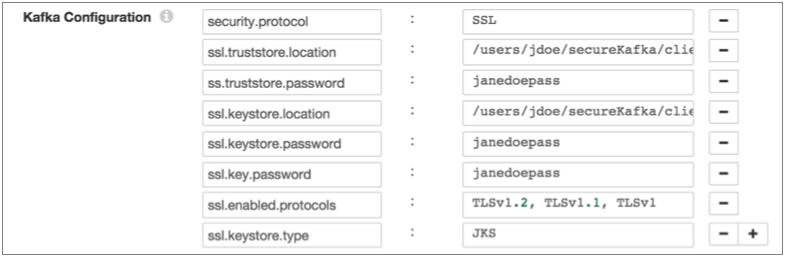

- On the Connection tab, add the security.protocol Kafka configuration property and set it to SSL.

- Then, add the following SSL Kafka configuration properties:

- ssl.truststore.location

- ssl.truststore.password

When the Kafka broker requires client authentication (when the ssl.client.auth broker property is set to "required"), add and configure the following properties:

- ssl.keystore.location

- ssl.keystore.password

- ssl.key.password

Some brokers might require adding the following properties as well:

- ssl.enabled.protocols

- ssl.truststore.type

- ssl.keystore.type

For details about these properties, see the Kafka documentation.

For example, to use SSL/TLS to connect to Kafka 0.9.0.0 with client authentication, set security.protocol to SSL and define the truststore and keystore properties listed above.
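As a hedged sketch, the SSL/TLS property set described above can be assembled as a Python dictionary of name/value pairs, which mirrors the name and value you enter for each custom Kafka property. The helper ssl_properties and the paths and passwords in the demo are hypothetical; substitute the values for your environment.

```python
from typing import Optional


def ssl_properties(
    truststore_location: str,
    truststore_password: str,
    keystore_location: Optional[str] = None,
    keystore_password: Optional[str] = None,
    key_password: Optional[str] = None,
) -> dict:
    """Build the custom Kafka properties for an SSL/TLS connection.

    The keystore arguments are only needed when the broker sets
    ssl.client.auth to "required"; pass all three or none of them.
    """
    props = {
        "security.protocol": "SSL",
        "ssl.truststore.location": truststore_location,
        "ssl.truststore.password": truststore_password,
    }
    client_auth = {
        "ssl.keystore.location": keystore_location,
        "ssl.keystore.password": keystore_password,
        "ssl.key.password": key_password,
    }
    provided = [value is not None for value in client_auth.values()]
    if any(provided) and not all(provided):
        raise ValueError("client authentication needs all three keystore properties")
    if all(provided):
        props.update(client_auth)
    return props


if __name__ == "__main__":
    # Hypothetical paths and passwords, for illustration only.
    example = ssl_properties(
        "/etc/sdc/kafka.client.truststore.jks", "truststore-secret",
        keystore_location="/etc/sdc/kafka.client.keystore.jks",
        keystore_password="keystore-secret",
        key_password="key-secret",
    )
    for name, value in example.items():
        print(f"{name}={value}")
```

Requiring all three keystore values together reflects the step above, which adds them as a group when the broker requires client authentication.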

Enabling Kerberos (SASL)

When you use Kerberos authentication, Data Collector uses the Kerberos principal and keytab to connect to Kafka version 0.9.0.0 or later.

Perform the following steps to enable the Kafka Consumer origin to use Kerberos to connect to Kafka:

- To use Kerberos, first make sure Kafka is configured for Kerberos as described in the Kafka documentation.

- Make sure that Kerberos authentication is enabled for Data Collector, as described in Kerberos Authentication.

- Add the Java Authentication and Authorization Service (JAAS) configuration properties required for Kafka clients based on your installation type:

- RPM or tarball installation - Add the properties to the JAAS configuration file used by Data Collector, the $SDC_CONF/ldap-login.conf file. Add the following properties to a client login section in the file named KafkaClient:

    KafkaClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        keyTab="<keytab path>"
        principal="<principal name>/<host name>@<realm>";
    };

For example:

    KafkaClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        keyTab="/etc/security/keytabs/kafka_client.keytab"
        principal="kafka-client-1/sdc-01.streamsets.net@EXAMPLE.COM";
    };

- Cloudera Manager installation - Add the properties to the Data Collector Advanced Configuration Snippet (Safety Valve) for generated-ldap-login-append.conf field for the StreamSets service in Cloudera Manager. Add the properties to the field as follows:

    KafkaClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        keyTab="_KEYTAB_PATH"
        principal="<principal name>/_HOST@<realm>";
    };

For example:

    KafkaClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        keyTab="_KEYTAB_PATH"
        principal="kafka-client-1/_HOST@EXAMPLE.COM";
    };

Cloudera Manager generates the appropriate keytab path and host name.


- On the General tab of the stage, set the Stage Library property to Apache Kafka 0.9.0.0 or a later version.

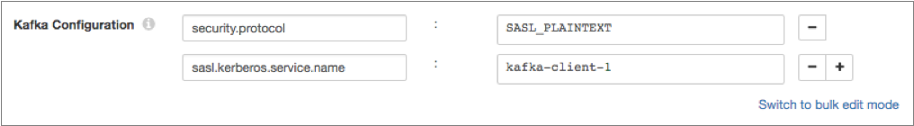

- On the Connection tab, add the security.protocol Kafka configuration property, and set it to SASL_PLAINTEXT.

- Then, add the sasl.kerberos.service.name configuration property, and set it to the Kerberos principal name that the Kafka brokers run as, for example, kafka. This is the broker's service name, not the client principal defined in the JAAS configuration properties.

For example, connecting to Kafka 0.9.0.0 with Kerberos requires the security.protocol property, set to SASL_PLAINTEXT, and the sasl.kerberos.service.name property.
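The two pieces of the Kerberos setup, the KafkaClient JAAS login section and the SASL_PLAINTEXT Kafka properties, can be sketched in Python as follows. Both helper names are hypothetical, and the service name kafka in the demo is a common broker convention assumed for illustration, not a value this document requires.

```python
def jaas_kafka_client_section(keytab: str, principal: str) -> str:
    """Render a KafkaClient login section for the Krb5LoginModule."""
    return (
        "KafkaClient {\n"
        "    com.sun.security.auth.module.Krb5LoginModule required\n"
        "    useKeyTab=true\n"
        f'    keyTab="{keytab}"\n'
        f'    principal="{principal}";\n'
        "};\n"
    )


def sasl_plaintext_properties(service_name: str) -> dict:
    """Custom Kafka properties for a Kerberos connection without SSL/TLS.

    service_name is the Kerberos principal name that the Kafka brokers run as.
    """
    return {
        "security.protocol": "SASL_PLAINTEXT",
        "sasl.kerberos.service.name": service_name,
    }


if __name__ == "__main__":
    print(jaas_kafka_client_section(
        "/etc/security/keytabs/kafka_client.keytab",
        "kafka-client-1/sdc-01.streamsets.net@EXAMPLE.COM",
    ))
    # "kafka" is an assumed broker service name, for illustration only.
    print(sasl_plaintext_properties("kafka"))
```

Keeping the client principal (in the JAAS section) separate from the broker service name (in the Kafka properties) makes it harder to mix the two up.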

Enabling SSL/TLS and Kerberos

You can enable the Kafka Consumer origin to use SSL/TLS and Kerberos to connect to Kafka version 0.9.0.0 or later.

- Make sure Kafka is configured to use SSL/TLS and Kerberos (SASL) as described in the Kafka documentation.

- Make sure that Kerberos authentication is enabled for Data Collector, as described in Kerberos Authentication.

- Add the Java Authentication and Authorization Service (JAAS) configuration properties required for Kafka clients based on your installation type:

- RPM or tarball installation - Add the properties to the JAAS configuration file used by Data Collector, the $SDC_CONF/ldap-login.conf file. Add the following properties to a client login section in the file named KafkaClient:

    KafkaClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        keyTab="<keytab path>"
        principal="<principal name>/<host name>@<realm>";
    };

For example:

    KafkaClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        keyTab="/etc/security/keytabs/kafka_client.keytab"
        principal="kafka-client-1/sdc-01.streamsets.net@EXAMPLE.COM";
    };

- Cloudera Manager installation - Add the properties to the Data Collector Advanced Configuration Snippet (Safety Valve) for generated-ldap-login-append.conf field for the StreamSets service in Cloudera Manager. Add the properties to the field as follows:

    KafkaClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        keyTab="_KEYTAB_PATH"
        principal="<principal name>/_HOST@<realm>";
    };

For example:

    KafkaClient {
        com.sun.security.auth.module.Krb5LoginModule required
        useKeyTab=true
        keyTab="_KEYTAB_PATH"
        principal="kafka-client-1/_HOST@EXAMPLE.COM";
    };

Cloudera Manager generates the appropriate keytab path and host name.


- On the General tab of the stage, set the Stage Library property to Apache Kafka 0.9.0.0 or a later version.

- On the Connection tab, add the security.protocol property and set it to SASL_SSL.

- Then, add the sasl.kerberos.service.name configuration property, and set it to the Kerberos principal name that the Kafka brokers run as, for example, kafka. This is the broker's service name, not the client principal defined in the JAAS configuration properties.

- Then, add the following SSL Kafka configuration properties:

- ssl.truststore.location

- ssl.truststore.password

When the Kafka broker requires client authentication (when the ssl.client.auth broker property is set to "required"), add and configure the following properties:

- ssl.keystore.location

- ssl.keystore.password

- ssl.key.password

Some brokers might require adding the following properties as well:

- ssl.enabled.protocols

- ssl.truststore.type

- ssl.keystore.type

For details about these properties, see the Kafka documentation.
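Because the Kafka Consumer does not validate custom property names or values, a user-side pre-check can catch a missing property before you start the pipeline. The Python sketch below encodes only the properties that the steps in this section ask you to add for each security.protocol value; the table and function names are hypothetical, and brokers that need ssl.enabled.protocols or the store type properties are not covered.

```python
# Properties that the steps in this section ask you to add for each protocol.
REQUIRED_BY_PROTOCOL = {
    "SSL": {"ssl.truststore.location", "ssl.truststore.password"},
    "SASL_PLAINTEXT": {"sasl.kerberos.service.name"},
    "SASL_SSL": {
        "sasl.kerberos.service.name",
        "ssl.truststore.location",
        "ssl.truststore.password",
    },
}
# Added when the broker sets ssl.client.auth to "required".
CLIENT_AUTH = {"ssl.keystore.location", "ssl.keystore.password", "ssl.key.password"}


def missing_security_properties(props: dict, client_auth: bool = False) -> set:
    """Return the property names that the steps require but props lacks."""
    protocol = props.get("security.protocol")
    required = set(REQUIRED_BY_PROTOCOL.get(protocol, set()))
    if client_auth and protocol in ("SSL", "SASL_SSL"):
        required |= CLIENT_AUTH
    # Treat empty strings the same as absent properties.
    return {name for name in required if not props.get(name)}


if __name__ == "__main__":
    partial = {"security.protocol": "SASL_SSL", "sasl.kerberos.service.name": "kafka"}
    print(sorted(missing_security_properties(partial)))
```

An unknown or absent security.protocol yields an empty set, since the steps above do not define extra properties for it.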

Data Formats

The Kafka Consumer origin processes data differently based on the data format. Kafka Consumer can process the following types of data:

- Avro

- Generates a record for every message. Includes a "precision" and "scale" field attribute for each Decimal field. For more information about field attributes, see Field Attributes.

- The origin writes the Avro schema to an avroSchema record header attribute. For more information about record header attributes, see Record Header Attributes.

- You can use one of the following methods to specify the location of the Avro schema definition:

- Message/Data Includes Schema - Use the schema in the message.

- In Pipeline Configuration - Use the schema that you provide in the stage configuration.

- Confluent Schema Registry - Retrieve the schema from Confluent Schema Registry. The Confluent Schema Registry is a distributed storage layer for Avro schemas. You can configure the origin to look up the schema in the Confluent Schema Registry by the schema ID embedded in the message or by the schema ID or subject specified in the stage configuration.

You must specify the method that the origin uses to deserialize the message. If the Avro schema ID is embedded in each message, set the key and value deserializers to Confluent on the Kafka tab.

- Using a schema in the stage configuration or retrieving a schema from the Confluent Schema Registry overrides any schema that might be included in the message and can improve performance.

- Binary

- Generates a record with a single byte array field at the root of the record.

- When the data exceeds the user-defined maximum data size, the origin cannot process the data. Because the record is not created, the origin cannot pass the record to the pipeline to be written as an error record. Instead, the origin generates a stage error.

- Datagram

- Generates a record for every message. The origin can process collectd messages, NetFlow 5 and NetFlow 9 messages, and the following types of syslog messages:

- RFC 5424 (https://tools.ietf.org/html/rfc5424)

- RFC 3164 (https://tools.ietf.org/html/rfc3164)

- Non-standard common messages, such as RFC 3339 dates with no version digit

- When processing NetFlow messages, the stage generates different records based on the NetFlow version. When processing NetFlow 9, the records are generated based on the NetFlow 9 configuration properties. For more information, see NetFlow Data Processing.

- Delimited

- Generates a record for each delimited line. You can use the following delimited format types:

- Default CSV - File that includes comma-separated values. Ignores empty lines in the file.

- RFC4180 CSV - Comma-separated file that strictly follows RFC4180 guidelines.

- MS Excel CSV - Microsoft Excel comma-separated file.

- MySQL CSV - MySQL comma-separated file.

- Postgres CSV - Postgres comma-separated file.

- Postgres Text - Postgres text file.

- Tab-Separated Values - File that includes tab-separated values.

- Custom - File that uses user-defined delimiter, escape, and quote characters.

- You can use a list or list-map root field type for delimited data, optionally including the header information when available. For more information about the root field types, see Delimited Data Root Field Type.

- When using a header line, you can allow processing records with additional columns. The additional columns are named using a custom prefix and integers in sequential increasing order, such as _extra_1, _extra_2. When you disallow additional columns when using a header line, records that include additional columns are sent to error.

- You can also replace a string constant with null values.

- When a record exceeds the maximum record length defined for the origin, the origin processes the record based on the error handling configured for the stage.

- JSON

- Generates a record for each JSON object. You can process JSON messages that include multiple JSON objects or a single JSON array.

- When an object exceeds the maximum object length defined for the origin, the origin processes the object based on the error handling configured for the stage.

- Log

- Generates a record for every log line.

- When a line exceeds the user-defined maximum line length, the origin truncates the line.

- You can include the processed log line as a field in the record. If the log line is truncated, and you request the log line in the record, the origin includes the truncated line.

- You can define the log format or type to be read.

- Protobuf

- Generates a record for every protobuf message. By default, the origin assumes messages contain multiple protobuf messages.

- Protobuf messages must match the specified message type and be described in the descriptor file.

- When the data for a record exceeds 1 MB, the origin cannot continue processing data in the message. The origin handles the message based on the stage error handling property and continues reading the next message.

- For information about generating the descriptor file, see Protobuf Data Format Prerequisites.

- SDC Record

- Generates a record for every record. Use to process records generated by a Data Collector pipeline using the SDC Record data format.

- For error records, the origin provides the original record as read from the origin in the original pipeline, as well as error information that you can use to correct the record.

- When processing error records, the origin expects the error file names and contents as generated by the original pipeline.

- Text

- Generates a record for each line of text or for each section of text based on a custom delimiter.

- When a line or section exceeds the maximum line length defined for the origin, the origin truncates it. The origin adds a boolean field named Truncated to indicate if the line was truncated.

- For more information about processing text with a custom delimiter, see Text Data Format with Custom Delimiters.

- XML

- Generates records based on a user-defined delimiter element. Use an XML element directly under the root element or define a simplified XPath expression. If you do not define a delimiter element, the origin treats the XML file as a single record.

- Generated records include XML attributes and namespace declarations as fields in the record by default. You can configure the stage to include them in the record as field attributes.

- You can include XPath information for each parsed XML element and XML attribute in field attributes. This also places each namespace in an xmlns record header attribute.

Note: Field attributes and record header attributes are written to destination systems automatically only when you use the SDC RPC data format in destinations. For more information about working with field attributes and record header attributes, and how to include them in records, see Field Attributes and Record Header Attributes.

- When a record exceeds the user-defined maximum record length, the origin skips the record and continues processing with the next record. It sends the skipped record to the pipeline for error handling.

- Use the XML data format to process valid XML documents. For more information about XML processing, see Reading and Processing XML Data.

- Tip: If you want to process invalid XML documents, you can try using the text data format with custom delimiters. For more information, see Processing XML Data with Custom Delimiters.
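For the delimited data format, the naming of additional columns can be sketched with Python's csv module. This is a simplified stand-in, not the origin's parser: it assumes the first line is the header, uses plain comma-separated values, and collects rows with additional columns in a separate list when they are disallowed, to stand in for sending them to error. The function name parse_with_extra_columns is hypothetical.

```python
import csv
import io


def parse_with_extra_columns(text, extra_prefix="_extra_", allow_extra=True):
    """Parse CSV text that starts with a header line.

    Returns (records, errors). Values beyond the header's columns are named
    extra_prefix + 1, extra_prefix + 2, and so on. When allow_extra is False,
    rows with additional columns go to errors instead of records.
    """
    reader = csv.reader(io.StringIO(text))
    header = next(reader)
    records, errors = [], []
    for row in reader:
        if not row:
            continue  # skip empty lines, as the Default CSV type does
        extra = row[len(header):]
        if extra and not allow_extra:
            errors.append(row)
            continue
        record = dict(zip(header, row))
        for index, value in enumerate(extra, start=1):
            record[f"{extra_prefix}{index}"] = value
        records.append(record)
    return records, errors


if __name__ == "__main__":
    sample = "a,b\n1,2\n3,4,5,6\n"
    print(parse_with_extra_columns(sample))
    print(parse_with_extra_columns(sample, allow_extra=False))
```

With the default prefix, the row 3,4,5,6 under the header a,b gets the additional fields _extra_1 and _extra_2, matching the naming pattern described for delimited data.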

Configuring a Kafka Consumer

Configure a Kafka Consumer to read data from a Kafka cluster.