Provisioned Databricks Cluster Requirements

When you use the PySpark processor in a pipeline that provisions a Databricks cluster, you must include several environment variables in the pipeline properties.

On the Cluster tab of the pipeline properties, add the following Spark environment variables to the cluster details configured in the Cluster Configuration property:

```json
"spark_env_vars": {
    "PYTHONPATH": "/databricks/spark/python/lib/py4j-<version>-src.zip:/databricks/spark/python:/databricks/spark/bin/pyspark",
    "PYSPARK_PYTHON": "/databricks/python3/bin/python3",
    "PYSPARK_DRIVER_PYTHON": "/databricks/python3/bin/python3"
}
```

Note that in the PYTHONPATH variable, you must replace `<version>` with the py4j version used by the cluster.
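To find the py4j version to substitute for `<version>`, one option is to look for the py4j source archive in `/databricks/spark/python/lib` on a cluster that runs the same Databricks Runtime as the cluster the pipeline provisions. This assumes that the py4j version ships with Spark and therefore follows the runtime. The following is a minimal sketch that you could run in a notebook cell. It assumes the archive uses the `py4j-<version>-src.zip` naming shown in PYTHONPATH. The function names `py4j_version_from_names` and `find_py4j_version` are hypothetical.

```python
import os
import re
from typing import Iterable, Optional

# Assumed archive naming, taken from the PYTHONPATH entry: py4j-<version>-src.zip
_PY4J_ZIP = re.compile(r"^py4j-(.+)-src\.zip$")


def py4j_version_from_names(names: Iterable[str]) -> Optional[str]:
    """Return the <version> part of the first py4j source archive in names."""
    for name in sorted(names):
        match = _PY4J_ZIP.match(name)
        if match:
            return match.group(1)
    return None


def find_py4j_version(lib_dir: str = "/databricks/spark/python/lib") -> Optional[str]:
    """Hypothetical helper: look up the py4j version locally, or None if absent."""
    if not os.path.isdir(lib_dir):
        return None
    return py4j_version_from_names(os.listdir(lib_dir))


if __name__ == "__main__":
    # Prints the version (for example, 0.10.7) when run on a Databricks cluster.
    print(find_py4j_version())
```

Off a Databricks cluster, the directory does not exist, so the sketch prints `None` instead of raising an error.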

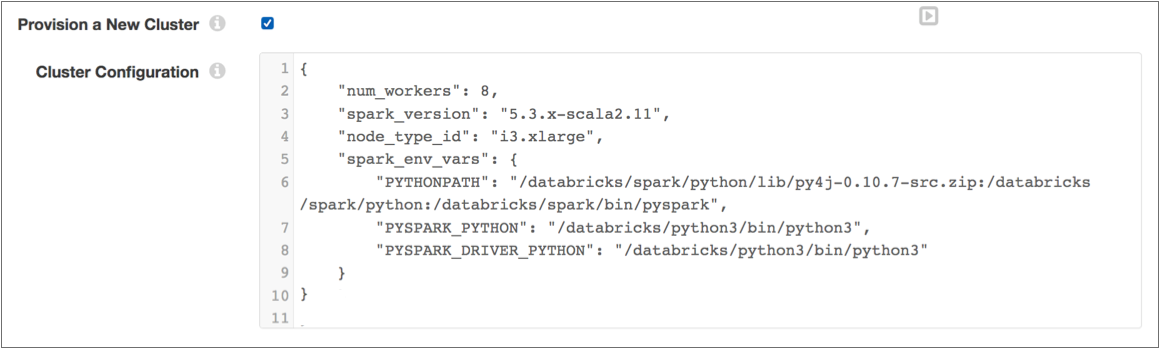

For example, the following cluster configuration provisions a Databricks cluster that uses py4j version 0.10.7 to run a pipeline with a PySpark processor:
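You can also generate the `spark_env_vars` entry for a specific py4j version, such as 0.10.7, instead of editing the path by hand. The following sketch uses a hypothetical helper, `add_pyspark_env_vars`, which is not part of Transformer or Databricks. It merges the three variables into existing cluster details supplied as JSON and preserves any other keys and environment variables that are already set. The paths are copied from the required variables, and only the py4j version changes. The `"num_workers": 2` value is a placeholder for whatever cluster details you already have.

```python
import json

# Paths copied from the required spark_env_vars; only the py4j version varies.
_PYTHON3 = "/databricks/python3/bin/python3"


def pyspark_env_vars(py4j_version: str) -> dict:
    """Build the three environment variables that the PySpark processor needs."""
    pythonpath = ":".join([
        f"/databricks/spark/python/lib/py4j-{py4j_version}-src.zip",
        "/databricks/spark/python",
        "/databricks/spark/bin/pyspark",
    ])
    return {
        "PYTHONPATH": pythonpath,
        "PYSPARK_PYTHON": _PYTHON3,
        "PYSPARK_DRIVER_PYTHON": _PYTHON3,
    }


def add_pyspark_env_vars(cluster_config_json: str, py4j_version: str) -> str:
    """Hypothetical helper: merge the variables into existing cluster details."""
    config = json.loads(cluster_config_json)
    env = dict(config.get("spark_env_vars", {}))  # keep variables already set
    env.update(pyspark_env_vars(py4j_version))
    config["spark_env_vars"] = env
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    # "num_workers": 2 is only a placeholder for your existing cluster details.
    print(add_pyspark_env_vars('{"num_workers": 2}', "0.10.7"))
```

You could then paste the printed JSON into the Cluster Configuration property on the Cluster tab.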

For more information about provisioning a Databricks cluster, see Provisioned Cluster.