Streaming Case Study

Transformer can run pipelines in streaming mode. A streaming pipeline maintains connections to origin systems and processes data at user-defined intervals. The pipeline runs continuously until you manually stop it.

A streaming pipeline is typically used to process data in stream processing platforms such as Apache Kafka.

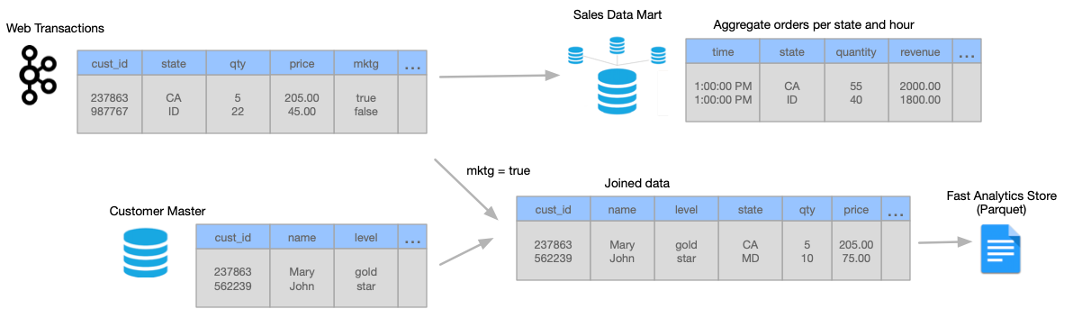

Let's say that your website transactions are continuously being sent to Kafka. The website transaction data includes the customer ID, shipping address, product ID, quantity of items, price, and whether the customer accepted marketing campaigns.

You need to create a data mart for the sales team that includes aggregated data about the online orders, including the total revenue for each state by the hour. Because the transaction data continuously arrives, you need to produce one-hour windows of data before performing the aggregate calculations.
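To make the windowed aggregation concrete, here is a minimal plain-Python sketch of grouping orders into one-hour tumbling windows and summing revenue per state. It is not Transformer configuration or its API. The field names (`timestamp`, `state`, `quantity`, `price`) are hypothetical, the state is assumed to have been extracted from the shipping address already, and revenue is assumed to be quantity multiplied by price.

```python
from collections import defaultdict
from datetime import datetime


def hourly_revenue_by_state(orders):
    """Sum quantity * price for each (window start, state) pair."""
    totals = defaultdict(float)
    for order in orders:
        # Truncate the event time to the start of its one-hour window.
        window_start = order["timestamp"].replace(minute=0, second=0, microsecond=0)
        totals[(window_start, order["state"])] += order["quantity"] * order["price"]
    return dict(totals)


# Hypothetical sample orders (assumed schema, not from the source data).
orders = [
    {"timestamp": datetime(2024, 1, 1, 9, 5), "state": "CA", "quantity": 2, "price": 10.0},
    {"timestamp": datetime(2024, 1, 1, 9, 50), "state": "CA", "quantity": 1, "price": 5.0},
    {"timestamp": datetime(2024, 1, 1, 10, 10), "state": "CA", "quantity": 1, "price": 7.5},
    {"timestamp": datetime(2024, 1, 1, 9, 30), "state": "NY", "quantity": 3, "price": 4.0},
]
revenue = hourly_revenue_by_state(orders)
```

The two CA orders placed between 9:00 and 10:00 land in the same window and are summed together, while the 10:10 order starts a new window.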

You also need to join the same website transaction data with detailed customer data from your data warehouse for the customers accepting marketing campaigns. You must send this joined customer data to Parquet files so that data scientists can efficiently analyze the data. To increase the analytics performance, you need to write the data to a small set of Parquet files.
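The filter, join, and repartition logic described here can be sketched in plain Python as follows. This is an illustration of the data flow only, not how Transformer implements it. The field names (`customer_id`, `accepts_marketing`), the inner-join behavior, and the helper names are assumptions made for the example.

```python
def join_marketing_customers(orders, customers_by_id):
    """Keep orders from customers who accepted marketing, then add customer details."""
    joined = []
    for order in orders:
        if not order["accepts_marketing"]:
            continue  # filter: only customers accepting marketing campaigns
        customer = customers_by_id.get(order["customer_id"])
        if customer is None:
            continue  # assumed inner join: drop orders with no matching customer
        joined.append({**order, **customer})
    return joined


def repartition(rows, num_partitions):
    """Spread rows round-robin over a fixed, small number of partitions."""
    return [rows[i::num_partitions] for i in range(num_partitions)]


# Hypothetical sample data (assumed schema).
orders = [
    {"customer_id": "c1", "product_id": "p9", "accepts_marketing": True},
    {"customer_id": "c2", "product_id": "p3", "accepts_marketing": False},
    {"customer_id": "c3", "product_id": "p7", "accepts_marketing": True},
    {"customer_id": "c1", "product_id": "p2", "accepts_marketing": True},
]
customers_by_id = {
    "c1": {"customer_id": "c1", "name": "Ada", "segment": "gold"},
    "c2": {"customer_id": "c2", "name": "Lin", "segment": "silver"},
}

joined = join_marketing_customers(orders, customers_by_id)
partitions = repartition(joined, 2)
```

In Spark, each partition is typically written as its own output file, so reducing the number of partitions before the write is what yields a small set of Parquet files.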

The following image shows a high-level design of the data flow and some of the sample data:

You can use Transformer to create and run a single streaming pipeline to meet all of these needs.

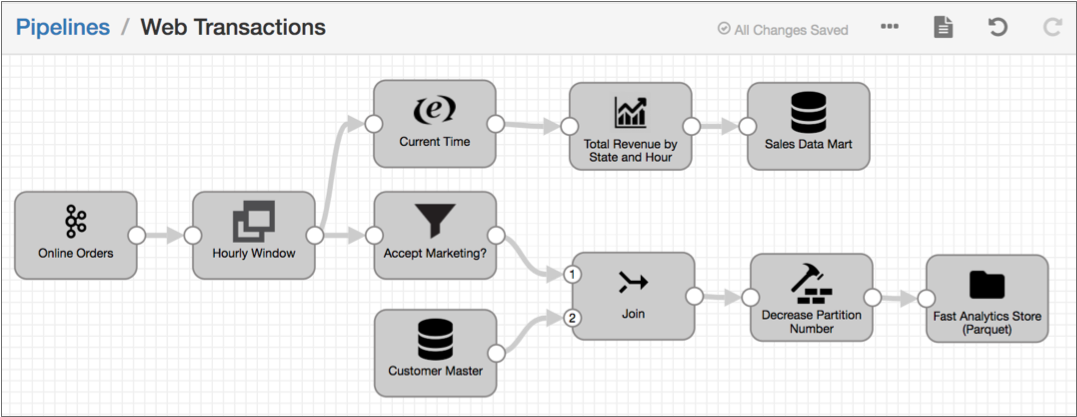

Let's take a closer look at how you design the streaming pipeline:

- Set execution mode to streaming

  On the General tab of the pipeline, you set the execution mode to streaming. You also specify a trigger interval that defines how long the pipeline waits before processing the next batch of data. Let's say you set the interval to 1000 milliseconds, or 1 second.

- Read from Kafka and then create one-hour windows of data

  You add a Kafka origin to the Transformer pipeline, configuring the origin to read from the weborders topic in the Kafka cluster.

- Aggregate data before writing to the data mart

  You create one pipeline branch that performs the processing needed for the sales data mart.

- Filter, join, and repartition the data before writing to Parquet files

  You create a second pipeline branch to perform the processing needed for the Parquet files used by data scientists.

The following image shows the complete design of this streaming pipeline:

When you start this streaming pipeline, the pipeline reads the available online order data in Kafka. The pipeline reads customer data from the database once, storing the data on the Spark nodes for subsequent lookups.

Each processor transforms the data, and then each destination writes the data to the data mart or to Parquet files. After processing all the data in a single batch, the pipeline waits 1 second, then reads the next batch of data from Kafka and reads the database data stored on the Spark nodes. The pipeline runs continuously until you manually stop it.
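The run-time behavior described above (load the lookup data once, then process a batch, wait for the trigger interval, and repeat) can be sketched as a simple loop. This is a plain-Python illustration, not Transformer or Spark code. The function and parameter names are hypothetical, and `max_batches` and the injectable `sleep` exist only so that the example terminates quickly; a real streaming pipeline runs until it is stopped.

```python
import time


def run_streaming(read_batch, load_customers, process,
                  trigger_interval_ms=1000, max_batches=None, sleep=time.sleep):
    """Process batches repeatedly, waiting the trigger interval between them."""
    customers = load_customers()  # read once, then reused for every batch
    batches = 0
    while max_batches is None or batches < max_batches:
        batch = read_batch()          # next batch of available records
        process(batch, customers)     # transform and write the batch
        batches += 1
        sleep(trigger_interval_ms / 1000)  # wait before the next batch


# Demo with stand-in callables (all hypothetical).
load_calls = []

def load_customers():
    load_calls.append(1)
    return {"c1": {"name": "Ada"}}

incoming = iter([[{"customer_id": "c1"}], [], [{"customer_id": "c1"}]])
seen = []
waits = []
run_streaming(
    read_batch=lambda: next(incoming),
    load_customers=load_customers,
    process=lambda batch, customers: seen.append((len(batch), len(customers))),
    max_batches=3,
    sleep=waits.append,  # record the wait instead of actually sleeping
)
```

The customer lookup is loaded a single time even though three batches are processed, which mirrors how the pipeline reads the database once and reuses the stored data for subsequent batches.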