Orchestration Record

An orchestration origin generates an orchestration record that contains details about the task that it performed, such as the IDs of the jobs or pipelines that it started and the status of those jobs or pipelines.

As this record passes through the pipeline, each orchestration stage updates the record, adding details about the task that it performed. When the orchestration pipeline completes, the resulting orchestration record provides information about all of the orchestration tasks that the pipeline performed.

For details about how each orchestration stage creates or updates an orchestration record, see "Generated Record" in the stage documentation.
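The way a record accumulates task details as it moves downstream can be pictured with a short Python sketch. This only illustrates the idea and is not how the stages are implemented: the `add_task` helper and the placeholder pipeline IDs are hypothetical, and the field names `orchestratorTasks`, `pipelineIds`, and `success` are taken from the example record shown later in this topic.

```python
import json


def add_task(record, task_name, pipeline_ids, success):
    """Return a copy of the record with one more task entry (hypothetical helper)."""
    tasks = dict(record.get("orchestratorTasks", {}))
    tasks[task_name] = {"pipelineIds": list(pipeline_ids), "success": success}
    return {**record, "orchestratorTasks": tasks}


# The origin creates the record with the details of its own task.
record = add_task({}, "Start_Transformer_Pipeline", ["<transformer pipeline ID>"], True)

# A downstream orchestration stage adds its task details to the same record.
record = add_task(record, "Start_Data_Collector_Pipeline", ["<data collector pipeline ID>"], True)

print(json.dumps(record, indent=2))
```

After both calls, the record holds one entry per orchestration task, keyed by task name.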

Example

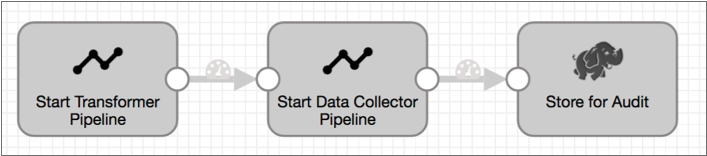

Say you have the following orchestration pipeline with a Start Pipelines origin that starts a Transformer pipeline, a Start Pipelines processor that starts a Data Collector pipeline, and a Hadoop FS destination to store the resulting orchestration record. Both Start Pipelines stages run the pipelines in the foreground, so they wait until their pipelines complete before passing the orchestration record downstream:

This orchestration pipeline generates the following orchestration record:

    {
      "orchestratorTasks": {
        "Start_Transformer_Pipeline": {
          "pipelineIds": [
            "LoadADLS82ea742b-4091-493c-9baf-07341d45564c"
          ],
          "pipelineResults": {
            "LoadADLS82ea742b-4091-493c-9baf-07341d45564c": {
              "pipelineId": "LoadADLS82ea742b-4091-493c-9baf-07341d45564c",
              "pipelineTitle": "Load ADLS",
              "startedSuccessfully": true,
              "finishedSuccessfully": true,
              "pipelineMetrics": {
                "pipeline": {
                  "outputRecords": 100000500,
                  "inputRecords": 100000500
                },
                "stages": {
                  "<origin1>": {
                    "outputRecords": 100000500
                  },
                  "<processor1>": {
                    "outputRecords": 100000500,
                    "inputRecords": 0
                  },
                  "<processor2>": {
                    "outputRecords": 100000500,
                    "inputRecords": 100000500
                  },
                  "<destination>": {
                    "outputRecords": 100000500,
                    "inputRecords": 100000500
                  }
                }
              },
              "pipelineStatus": "FINISHED",
              "pipelineStatusMessage": null,
              "committedOffsetsStr": "{<offset info>}"
            }
          },
          "success": true
        },
        "Start_Data_Collector_Pipeline": {
          "pipelineIds": [
            "SalesProc2a4aa879-ebe2-44f3-be67-f3e95588be6e"
          ],
          "pipelineResults": {
            "SalesProc2a4aa879-ebe2-44f3-be67-f3e95588be6e": {
              "pipelineId": "SalesProc2a4aa879-ebe2-44f3-be67-f3e95588be6e",
              "pipelineTitle": "Sales Processing",
              "startedSuccessfully": true,
              "finishedSuccessfully": true,
              "pipelineMetrics": {
                "pipeline": {
                  "outputRecords": 121130,
                  "errorMessages": 0,
                  "inputRecords": 121130,
                  "errorRecords": 0
                },
                "stages": {
                  "<origin>": {
                    "outputRecords": 121130
                  },
                  "<processor>": {
                    "outputRecords": 121130,
                    "errorMessages": 0,
                    "inputRecords": 121130,
                    "errorRecords": 0
                  },
                  "<destination>": {
                    "outputRecords": 121130,
                    "errorMessages": 0,
                    "inputRecords": 121130,
                    "errorRecords": 0
                  }
                }
              },
              "pipelineStatus": "FINISHED",
              "pipelineStatusMessage": null,
              "committedOffsetsStr": "{<offset info>}"
            }
          },
          "success": true
        }
      }
    }

- The Start_Transformer_Pipeline task performed by the Start Pipelines origin starts the Load ADLS Transformer pipeline. From the startedSuccessfully and finishedSuccessfully fields, you can see that the pipeline started and completed successfully before the record was passed downstream. You can also see the pipeline and stage metrics provided by the origin.
- The Start_Data_Collector_Pipeline task performed by the Start Pipelines processor starts the Sales Processing Data Collector pipeline. This pipeline also started and completed successfully, and the pipeline metrics are included in the orchestration record.
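
If you read the stored record back later, for example from the files written by the Hadoop FS destination, a few lines of Python are enough to check the same fields programmatically. The following is a minimal sketch that assumes the record is available as a JSON string. The `summarize` and `all_succeeded` helpers are hypothetical names, and the sample is a trimmed version of the record above that keeps only the fields the helpers read.

```python
import json

# Trimmed version of the example record; stage-level metrics are omitted.
record_json = """
{
  "orchestratorTasks": {
    "Start_Transformer_Pipeline": {
      "pipelineResults": {
        "LoadADLS82ea742b-4091-493c-9baf-07341d45564c": {
          "pipelineTitle": "Load ADLS",
          "finishedSuccessfully": true,
          "pipelineMetrics": {"pipeline": {"outputRecords": 100000500}},
          "pipelineStatus": "FINISHED"
        }
      },
      "success": true
    },
    "Start_Data_Collector_Pipeline": {
      "pipelineResults": {
        "SalesProc2a4aa879-ebe2-44f3-be67-f3e95588be6e": {
          "pipelineTitle": "Sales Processing",
          "finishedSuccessfully": true,
          "pipelineMetrics": {"pipeline": {"outputRecords": 121130}},
          "pipelineStatus": "FINISHED"
        }
      },
      "success": true
    }
  }
}
"""


def summarize(record):
    """Return one (task, pipeline title, status, output records) tuple per pipeline."""
    rows = []
    for task_name, task in record["orchestratorTasks"].items():
        for result in task["pipelineResults"].values():
            metrics = result.get("pipelineMetrics", {}).get("pipeline", {})
            rows.append((
                task_name,
                result["pipelineTitle"],
                result["pipelineStatus"],
                metrics.get("outputRecords"),
            ))
    return rows


def all_succeeded(record):
    """Return True only if every task in the record reports success."""
    return all(task["success"] for task in record["orchestratorTasks"].values())


record = json.loads(record_json)
for row in summarize(record):
    print(row)
print("All tasks succeeded:", all_succeeded(record))
```

The helpers iterate over the values of pipelineResults rather than looking up pipelines by ID, so they work for tasks that start any number of pipelines.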